This page gives a high-level overview of the design of the Python-based HWRF scripting system, known as pyHWRF. It describes the overall structure of the system and links to other parts of this wiki for details on each individual layer.

Purpose

Prior to this rewrite, there were many different scripting systems to run the HWRF model, which led to wasted effort maintaining parallel versions and tracking down the reasons for unexpected differences in forecast results. Part of the reason is that all of those scripting systems were monolithic, with designs that made it difficult to adapt to new batch systems, workflow management systems, operating systems, or HWRF configurations. Hence, every organization needed its own scripts to run the HWRF, and some organizations needed several scripting systems. The pyHWRF project aims to solve this problem by creating an object-oriented, layered scripting system that minimizes the amount of work needed to modify it.

Overall System Design

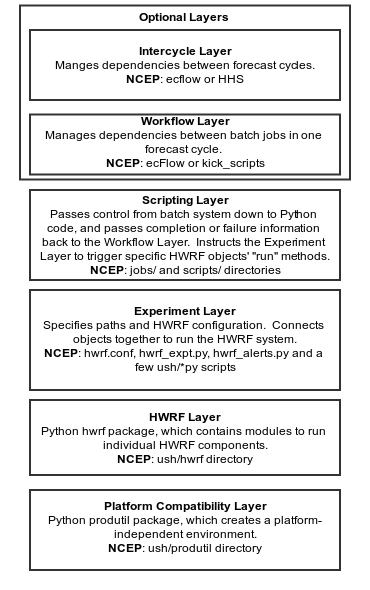

The pyHWRF system was divided into several layers, where each layer never interacts with layers above it. This is done to simplify both adding new modeling or post-processing functionality, and porting to other platforms. By completely separating portability issues, setting paths, and modeling aspects of the implementation into independent layers, we avoid having to modify all aspects of the functionality when modifying only one. A diagram of this "division of labor through layering" is below:

The remainder of this page documents these layers at an abstract level, and provides links to additional information.

Optional High-Level Layers

These high-level layers handle various levels of automation, which some applications do not need.

Intercycle Layer

The HWRF system, like most forecasting systems, takes input for a particular analysis time and then forecasts what the atmosphere and ocean will do for some amount of time afterwards. Each set of jobs run for one analysis time is referred to as a cycle. The Intercycle layer handles interactions between cycles, which generally involve complex dependencies: files transferred between cycles, archiving to tape, scrubbing output so that disk space is always available, and so on. In the case of NCEP Central Operations (NCO), which runs the operational HWRF, the Intercycle layer is a human operator overseeing some automation scripts. For the EMC parallels, it is the HHS automation system. For DTC, and for EMC in the future, it is the Rocoto automation system. For a case study or when simply debugging, this layer is not needed at all, but can sometimes be convenient. For any large retrospective test of many storms, or for an automated real-time forecast of many cycles, this layer is critical.
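To make the notion of a cycle concrete, the following is a minimal sketch of how an Intercycle layer might enumerate cycles and find the one that precedes a given cycle. It is not taken from the pyHWRF source: the function names and the six-hour spacing between analysis times are assumptions made for illustration.

```python
from datetime import datetime, timedelta

def list_cycles(first, last, step_hours=6):
    """Return analysis times from first to last, inclusive.

    The six-hour default spacing is an assumption for this sketch.
    """
    step = timedelta(hours=step_hours)
    result = []
    current = first
    while current <= last:
        result.append(current)
        current += step
    return result

def previous_cycle(all_cycles, cycle):
    """Return the cycle just before `cycle`, or None for the first one."""
    index = all_cycles.index(cycle)
    return all_cycles[index - 1] if index > 0 else None

# Hypothetical date range for one storm.
storm_cycles = list_cycles(datetime(2012, 10, 28, 0), datetime(2012, 10, 29, 0))
for cycle in storm_cycles:
    print(cycle.strftime("%Y%m%d%H"), "follows", previous_cycle(storm_cycles, cycle))
```

The first cycle has no predecessor, which is the situation an Intercycle layer has to treat differently from a cycle that can reuse files from the one before it.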

Workflow Layer

When running the HWRF in an automated manner, one must split the work into multiple batch jobs, each of which runs part of the HWRF system on some supercomputer compute nodes. Certain pieces of the HWRF system cannot start until certain others finish. For example, the forecast job requires the initial and boundary conditions. The Workflow layer handles this ordering. Strictly speaking, this layer is not needed: one can run the pyHWRF in an interactive batch job, and the developers of this system test in that manner. However, it is generally easier and less error-prone to use a workflow layer of some sort.
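The ordering problem described here can be sketched as a dependency graph that is sorted topologically. This is only an illustration of the idea, not how ecflow, HHS, or Rocoto are implemented. The job names are hypothetical, and the example assumes Python 3.9 or later for the standard-library `graphlib` module.

```python
from graphlib import TopologicalSorter

# Hypothetical job names. Each job maps to the set of jobs it must wait for.
dependencies = {
    "init": set(),
    "boundary": set(),
    "forecast": {"init", "boundary"},
    "post": {"forecast"},
}

def run_order(deps):
    """Return one execution order that respects every dependency."""
    return list(TopologicalSorter(deps).static_order())

order = run_order(dependencies)
print(order)
```

A real workflow manager does more than compute an order: it submits each job to the batch system and waits for completion before releasing the jobs that depend on it.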

High-Level Layer Documentation

- Intercycle and Workflow Layers – describes the available and planned implementations of the optional top two layers of the system, which implement the interaction with the batch system

- ecflow HWRF – the automation system used by NCEP Central Operations to run the operational HWRF. It is based on the ECMWF ecflow automation system.

- HHS and kick_scripts – the system used by the NCEP Environmental Modeling Center (EMC) to automate EMC HWRF simulations for the past few years.

- Rocoto HWRF – Rocoto is a NOAA-originated workflow management system with capabilities similar to ecflow. EMC plans on using this instead of the HHS+kick_scripts combination.

Scripting Layer

To run any scripts on a supercomputer, one generally has to load certain programs or libraries into one's environment, and ensure that the filesystems required by the scripts are available on the compute node in use. In addition, when connecting HWRF to a workflow layer (see above), some additional work is needed to pass information, such as file and executable locations, to the next lower layer, the Experiment Layer (see below). This is the job of the Scripting Layer. This layer is optional: the user can do its work manually, and the system has been tested in that way, but doing so is laborious.

Scripting Layer Documentation

- Scripting Layer – more documentation on the Scripting Layer

- NCEP jobs/scripts/ush – the Scripting Layer used by the operational HWRF

Experiment Layer

This layer consists of one or more files, depending on the Intercycle, Workflow and Scripting Layers in use (see above). Its purpose is to configure the HWRF system, and when relevant, provide the Scripting Layer with a way of accessing all of the Python objects. Specifically, it consists of:

- hwrf.conf – mandatory: this UNIX conf file is used by the HWRF Layer (see below) to configure paths, Fortran namelists, and other information

- hwrf_expt.py – mandatory if a Scripting Layer is in use: a Python module that describes the entire object structure.

- hwrf_alerts.py – a Python module used by the Scripting Layers at NCEP to describe certain delivery methods specific to NCEP operations.

- hwrf_generate_vitals.py – a script that generates a list of cycles that are known to exist for a particular storm of interest. This script is meant to be used by the Scripting, Workflow or Intercycle layer to get the list of cycles for automation purposes. The result is then cached and sent down to the HWRF layer for such purposes as generating a cold wake, or verifying cycling. It is also intended to be run by scripts running entirely outside the HWRF system: it is smart enough to set paths appropriately. It does require the hwrf and produtil packages, though, as described below.
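As an illustration of the hwrf.conf entry above, the following sketch reads a small conf file with Python's standard `configparser` module. It assumes the file uses the INI-style section and option syntax that `configparser` understands. The section names, option names, and values are hypothetical and are not taken from the real hwrf.conf.

```python
import configparser

# Hypothetical contents; the real hwrf.conf sections and options may differ.
EXAMPLE_CONF = """
[dir]
work = /path/to/work
output = %(work)s/output

[forecast]
length_hours = 126
"""

def load_conf(text):
    """Parse conf-file text and return the populated parser."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    return parser

conf = load_conf(EXAMPLE_CONF)
output_dir = conf.get("dir", "output")                  # %(work)s is expanded
forecast_hours = conf.getint("forecast", "length_hours")
print(output_dir, forecast_hours)
```

Keeping paths and run lengths in a conf file rather than in code is what lets the same Python objects run under different experiment setups.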

Experiment Layer Documentation

- FIXME: Insert links to Experiment Layer pages.

HWRF Layer: Python hwrf Package

This is the layer that runs the actual HWRF itself, based on configuration sent in by the Experiment Layer (see above). It works by having a set of interconnected Python objects, each of which knows how to run a small piece of the HWRF, such as the ocean initialization or forecast. The Experiment Layer constructs this tree of objects, typically in hwrf_expt.py, which gives the Scripting Layer a way to run each component. This layer makes up the vast majority of the source code. In essence, the rest of the layers exist to simplify, and run, this layer.
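The relationship between the object tree and the layers that use it can be sketched as follows. This is a simplified illustration only: the `Task` class, its `run` method, and `build_experiment` are hypothetical names and do not reflect the actual classes in the hwrf package.

```python
class Task:
    """Hypothetical base class: one small piece of the system."""

    def __init__(self, name, inputs=()):
        self.name = name
        self.inputs = list(inputs)   # tasks whose output this task needs
        self.done = False

    def run(self):
        """Refuse to run until every input task has completed."""
        for task in self.inputs:
            if not task.done:
                raise RuntimeError(f"{self.name} needs {task.name} first")
        self.done = True
        return self.name

def build_experiment():
    """Experiment Layer role: construct the interconnected objects."""
    ocean_init = Task("ocean_init")
    forecast = Task("forecast", inputs=[ocean_init])
    return {"ocean_init": ocean_init, "forecast": forecast}

# Scripting Layer role: look up a component by name and run it.
expt = build_experiment()
ran = [expt["ocean_init"].run(), expt["forecast"].run()]
print(ran)
```

Because each script only looks up one object and calls it, the knowledge of how components connect stays in one place, the module that builds the tree.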

HWRF Layer Documentation

- FIXME: Add link to high-level hwrf package documentation

- FIXME: Add link to Python docstring documentation

Platform Compatibility Layer: Python produtil Package

This layer sits on top of the Python standard library, providing a platform-independent and version-independent environment in which to run Python scripts. Unlike other layers, it is intended to be accessed by all layers above it. In addition, it is designed to be separable from the HWRF system: it is completely general and contains nothing HWRF-specific.

Platform Compatibility Layer Documentation

- Package produtil

- FIXME: Add link to Python docstring documentation.