Data Analysis Services Group - April 2011

News and Accomplishments

xxx

VAPOR Project

Project information is available at: http://www.vapor.ucar.edu

XD Vis Award:

Alan merged the 2.1 source tree branch back into the main trunk. We will continue development on the trunk until our next release.

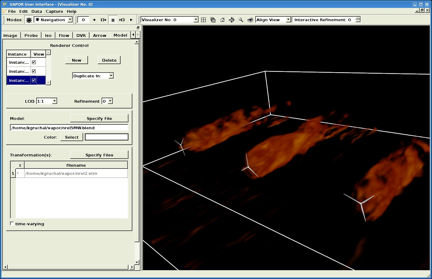

The team had several meetings to discuss the extension API design. We regarded the design and the documentation as too complicated. It was simplified and then we asked Kenny Gruchalla (NREL) to review it. Kenny went ahead and implemented a renderer extension to visualize turbine blades in a wind simulation. He did this in only 3 days! The following is a screen-shot he provided us, showing a vapor volume rendering combined with the turbine blades:

Alan implemented a Box class as an embedded extension class and eliminated all of the old-style box access methods. The new Box class is based on an XML representation and includes the ability to change the extents over time and to orient the box in arbitrary directions. This is also the first working example of an embedded extension class built on the XML representation. Its implementation exposed a few bugs that we had not encountered before.

TG GIG PY6 Award: Yannick made progress on a number of fronts:

- Completed primary PIOVDC build system development

- Completed primary PIOVDC development

- Completed PIOVDC/VDF code merge

- Testing code merge (current activity)

- Testing PIOVDC/GHOST integration (current activity)

KISTI Proposal:

After reviewing NCAR's proposal, KISTI requested a number of minor modifications to the budget and schedule of deliverables. A revised budget and WBS have been submitted to KISTI for another round of review.

Development:

We reviewed the color mapping conversions that Kendall has been working on. We found that the conversion does not work well when there is a large number of control points. It works fairly well with no more than 10 control points; however, that will require some manual tweaking to get good color maps.

Alan has been implementing Python versions of useful WRF derived variables, as recommended by Sherrie Fredrick. Most of these variables are available in NCL and are implemented in FORTRAN. However, some of them are based on a layered representation of the data, which is not currently accessible from Python. At this point we have converted all but three of the WRF derived variables that Sherrie requested.

Alan found some bugs in opening sessions with multiple visualizers; some of these bugs were introduced since the 2.0 release. Most of these bugs were fixed, but there remain a couple of issues to resolve, problems that were already in the 2.0 code.

Yannick and John began investigating a bug in the isosurface renderer that appears on newer graphics cards and results in incorrect hidden surface removal (Z-buffering).

Kendall and John have been working to resolve a compatibility problem in the Mac builds: binaries built under Mac OS 10.6 will not run under 10.5.

Kendall started updating the asciitf2vtf program to allow the conversion of NCL colormaps to the VAPOR transfer function format.

Work continued on prototyping an abstract data type to represent Cartesian gridded data in VAPOR. For performance reasons that are becoming obsolete with microprocessor advances, the current VAPOR implementation simply represents gridded data as arrays of floats. A C++ class object could greatly simplify numerous aspects of the code, improve reliability, and provide a clean path for handling missing data. Efficient C++ Standard Template Library iterators for sequential data access were implemented, and their performance was compared against conventional C++ method access.
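To make the idea concrete, here is a minimal sketch of what such a class might look like. It is not the VAPOR prototype: the class name `RegularGrid`, its methods, the x-fastest memory layout, and the sentinel-based missing-value convention are all assumptions made for illustration. It exposes both conventional indexed method access and STL-style iterators over the same underlying float array, the two access paths whose performance was compared.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical sketch (not VAPOR code): a Cartesian grid that wraps a
// flat float array and knows about a missing-value sentinel.
class RegularGrid {
public:
    using iterator = std::vector<float>::iterator;
    using const_iterator = std::vector<float>::const_iterator;

    RegularGrid(std::size_t nx, std::size_t ny, std::size_t nz, float missing)
        : nx_(nx), ny_(ny), nz_(nz), missing_(missing),
          data_(nx * ny * nz, 0.0f) {}

    std::size_t nx() const { return nx_; }
    std::size_t ny() const { return ny_; }
    std::size_t nz() const { return nz_; }
    std::size_t size() const { return data_.size(); }

    // Conventional method access by (i, j, k); x varies fastest in memory.
    float& at(std::size_t i, std::size_t j, std::size_t k) {
        assert(i < nx_ && j < ny_ && k < nz_);
        return data_[(k * ny_ + j) * nx_ + i];
    }
    float at(std::size_t i, std::size_t j, std::size_t k) const {
        assert(i < nx_ && j < ny_ && k < nz_);
        return data_[(k * ny_ + j) * nx_ + i];
    }

    // Missing data is marked with an exact sentinel value (an assumption).
    bool isMissing(float v) const { return v == missing_; }

    // STL-style iterators for sequential access in memory order.
    iterator begin() { return data_.begin(); }
    iterator end() { return data_.end(); }
    const_iterator begin() const { return data_.begin(); }
    const_iterator end() const { return data_.end(); }

private:
    std::size_t nx_, ny_, nz_;
    float missing_;
    std::vector<float> data_;
};

// Sum all valid samples using sequential iterator access.
double sumByIterator(const RegularGrid& g) {
    double sum = 0.0;
    for (float v : g) {
        if (!g.isMissing(v)) sum += v;
    }
    return sum;
}

// Sum all valid samples using indexed method access.
double sumByIndex(const RegularGrid& g) {
    double sum = 0.0;
    for (std::size_t k = 0; k < g.nz(); ++k)
        for (std::size_t j = 0; j < g.ny(); ++j)
            for (std::size_t i = 0; i < g.nx(); ++i) {
                float v = g.at(i, j, k);
                if (!g.isMissing(v)) sum += v;
            }
    return sum;
}
```

Both functions visit the same samples in the same order, so timing them against each other on a large grid is one simple way to compare the two access styles.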

Outreach and Consulting:

Alan met with Mel Shapiro to visualize the Erica storm. Mel is interested in using VAPOR to present his results at some upcoming speaking engagements. He identified a number of derived variables that will be needed to place flow seed points. He also reiterated his desire to have isolines for his analysis, including isolines superimposed on a colormapped planar image. Mel contacted his associate, Ryan Maue, to obtain the higher resolution WRF output of the Erica storm. We are in the process of downloading this data, which will total nearly a terabyte.

Documentation:

Kendall continued work on a Drupal-based documentation skeleton that will be used to help guide VAPOR's user documentation overhaul.

Misc:

Alan and John attended the DOECGF conference in Asheville, NC. John presented the NCAR site report and Alan presented a VAPOR progress report. The conference was useful because we learned of many efforts at other labs that overlap with our work. We found that there is a project at Los Alamos to visualize POP output, and we may benefit by sharing experience with that team.

We continue to respond to queries on the VAPOR mailing list.

Software Research Projects

Feature Tracking:

Climate data compression:

Data Analysis & Visualization Lab Projects

File System Space Management Project

- Started evaluating Robinhood as a possible alternative to the GPFS "fileset" option. Checked the Robinhood release status and features. The "class" configuration option within a file system could be used to maintain separate sets of sub-directories and track usage statistics with customized policies. To enforce hard quota limits we would still need an external script to block further writes, but the flexibility and response time of the "rbh-report" tool make it a usable option, especially with Lustre 2.0, where it can read the ChangeLog directly and skip the file system scans.

- Began testing the Robinhood software as a means of collecting accounting data for the new GLADE projects and allocations. Initial tests show we can scan both the proj2 and proj3 file systems within 4 hours.

Visualization Test Bed Project

- xxx

Accounting & Statistics Project

- Started evaluating Robinhood as a possible alternative to the GPFS "fileset" option. Checked the Robinhood release status and features. The "class" configuration option within a file system could be used to maintain separate sets of sub-directories and track usage statistics with customized policies. To enforce hard quota limits we would still need an external script to block further writes, but the flexibility and response time of the "rbh-report" tool make it a usable option, especially with Lustre 2.0, where it can read the ChangeLog directly and skip the file system scans.

Security & Administration Projects

- Deployed an initial version of the distributed DASG KROLE management utilities. This gives us the ability to feed the KROLE principal creation email directly to a kroleinit program, running on a secure system, which acquires the credential and forwards it to the kroled daemon for installation. Kroled also handles the automatic nightly credential renewal and sends email when credentials are about to expire and need to be reinitialized. Determined that the Cisco VPN client on OS X prevents Kerberos client/server interactions from working in some situations.

- Started work on code to retrieve information from the LDAP ACC8 database to gather authoritative user and group information for DASG system administration and KROLE management.

- Integrated SSG KROLE credential management into a common KROLE "island" with the DAV clusters to support HPSS access from lynx, bluefire and firefly.

System Monitoring Project

- xxx

CISL Projects

GLADE Project

- xxx

Lustre Project

- Built a small Lustre server using the 2.0 distribution on the swtest1 machine. The primary goal was to test interoperability with older versions.

- Confirmed that 2.0 versions cannot mount the 1.8 servers, unlike past cases where 1.8 clients could mount older 1.6 servers and the other way around.

- 1.8 clients can mount 2.0 servers, as promised by the Lustre developers. 1.6 clients (currently CLE2.2 on lynx) cannot mount the 2.0 server, implying that external file system servers in version 2.0 will not be compatible with CRAY until CLE3.1.

- Performed tests with the Lustre 2.0 client on sleet and the Lustre 1.8.5 client on magic, and they work as expected.

- Following a suggestion that a transient Lustre setup with memory-resident OSTs can deliver a significant boost to data analysis programs, looked into the feasibility of the option. The main constraint will be the OST size, which will be too small even with enough physical memory (typically an upper bound in the 512M range, which will not be feasible for ~10GB OSTs even after SW RAID0).

- Lustre User Group meeting

- Learned of the fragmentation in Lustre community since LUG10: OpenSFS, HPCFS, EOFS, Whamcloud and Xyratex

- Site updates: GAIA of CRCM (NOAA) at ORNL, Fujitsu, TSUBAME of Tokyo Institute of Technology

- Technical trends: Developers who used to be at Sun Microsystems are now at Xyratex and Whamcloud

- During the meeting, the five organizations agreed to support Whamcloud as the release authority for the 2.1 version (the first one since the last Oracle release). The future after 2.1 is still uncertain. Had a separate meeting with Whamcloud (Dan Ferber and Bryon Neitzel): Whamcloud is not yet clear on the "direct" support contract.

Data Transfer Services Project

- Examined the "Globus Online" portal documentation to see if we need to do anything on our servers to permit users to use Globus Online to manage transfers. It does not appear we have to do anything.

GridFTP/HPSS Interface

- xxx

TeraGrid Project

- xxx

Lynx Project

- xxx

Batch Systems & Scheduler Project

- xxx

NWSC Planning

- Continued evaluation of vendor proposals.

- Summarized the I/O benchmarks submitted by vendors.

- Continued with transition planning for GLADE. This includes planning for the movement of space hosting RDA data to new storage at the NWSC.

Production Visualization Services & Consulting

- xxx

Publications, Papers & Presentations

- xxx

System Support

Data Analysis & Visualization Clusters

- Brought systems down and back up for the annual Spring power down.

- Fixed Kendall's access to the /glade/proj3 file system after his Mac was switched to using datagate3 instead of blizzard for Kerberized NFS. Also helped Alan and John diagnose some NFS issues on their Macs.

- Installed java1.6 on all systems in order to support the IDE for the Intel compilers.

- Installed gcc44, unzip60, and Unidata IDV on all systems at the request of users.

- Provided system and license information to Dorothy Bustamante for the IBM audit.

GLADE Storage Cluster

- Brought systems down and back up for the annual Spring power down.

- Created spaces on GLADE and updated the group information for the RAL, MMM, and CSL allocations.

- The following project spaces were removed: dasg016, dasg014, dasg012, and dasg011.

- Replaced the "jake card" (SATA bridge chip card) in the C channel drawer of the DDN 9550 storage unit and rebuilt all failed drives.

- Worked with SSG to mount the GLADE filesystems on Firefly natively, instead of using NFS.

- Ran several performance tests on the DDN 9900 from several hosts to estimate the speed of copying data to Wyoming.

TeraGrid Cluster

- Re-enabled and started the sendmail daemon on both twister systems.

- Updated all packages and the rkhunter software on both twister systems.

- Modified artoms to send twister backups to /glade/scratch instead of frost's /ptmp.

Legacy MSS Systems

- Finished equipment decommissioning activities.

Data Transfer Cluster

- Adjusted datagate firewalls to let Chi-fan transfer data from here to OSC. GridFTP behaves differently if the parallel protocol is used instead of the basic FTP-style transfer.

Other

- xxx