The 7m TRH has been sick since about Jun 23. It looks like a bad RS232 connection. The data is good for periods of time, then garbage.

Here's a bit of data where it was in and out:

data_dump -A -i 1,220 manitou_20100627_120000.dat | more

2010 06 27 13:44:10.4165 22.59 20 \xfc\xfe1\xfe~3f\xf04984\xe02929\r\n

2010 06 27 13:44:12.0258 1.609 26 \xfc\xfe10.\xf08\xe088\xee33 4985 2929\r\n

2010 06 27 13:44:13.6407 1.615 29 T\xde\xf8?\xfe10.08 88.30 4985 2928\r\n

2010 06 27 13:44:15.2587 1.618 31 TRH009 10.06 88.30 4984 0928\r\n

2010 06 27 13:44:16.8703 1.612 31 TR\xf8\xf00\xff\xe010.06 88.33 4984 \x00929\r\n

2010 06 27 13:44:18.4900 1.62 30 TR\xf80\x06\xff10.06 88.35 4984 0830\r\n

2010 06 27 13:44:20.1026 1.613 62 TRH009\xe010.04 88.37 4982 09\xc0\x8b\xd6\x98\xf8TR\xf800\xf9\xe010.05 88.48 4 983 093\x05\r\n
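To separate the good records from the garbled ones in a dump like this, a small filter can flag any message in which data_dump -A had to escape non-printable bytes. This is a minimal Python sketch, assuming each line is year, month, day, time, delta-T (s), byte count and then the message (our reading of the columns, not a documented format); `parse_record` and `is_garbled` are hypothetical helper names.

```python
import re

# One data_dump -A line: year month day time, delta-T (s), byte count, message.
# (Column meanings are our reading of the output above.)
RECORD = re.compile(
    r"^(\d{4}) (\d{2}) (\d{2}) (\d{2}:\d{2}:\d{2}\.\d+) (\S+) (\d+) (.*)$"
)
# data_dump -A shows non-printable bytes as \xNN escapes.
ESCAPE = re.compile(r"\\x[0-9a-fA-F]{2}")


def parse_record(line):
    """Split one data_dump -A line into fields; return None if it doesn't match."""
    m = RECORD.match(line.strip())
    if m is None:
        return None
    year, month, day, hms, dt, nbytes, msg = m.groups()
    return {
        "time": f"{year}-{month}-{day} {hms}",
        "dt": float(dt),
        "nbytes": int(nbytes),
        "msg": msg,
    }


def is_garbled(msg):
    """True if the message contains any escaped non-printable byte."""
    return ESCAPE.search(msg) is not None


if __name__ == "__main__":
    sample = r"2010 06 27 13:44:18.4900 1.62 30 TR\xf80\x06\xff10.06 88.35 4984 0830\r\n"
    rec = parse_record(sample)
    print(rec["time"], "garbled" if is_garbled(rec["msg"]) else "ok")
```

A record such as TRH009 10.06 88.30 4984 0928 passes this check even though its last field looks wrong (0928 rather than 2928), so a range check on the values would still be needed.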

On May 13, two sapflow sensors were installed on each of 12 trees approx. 50 m SE of the turbulence tower. Heat flux data will be downloaded at approx. 2-week intervals, and anyone interested in the data should contact Jia Hu (jiahu@ucar.edu). At some point, heat fluxes will be converted to sapflow data and made available to help constrain water flux measurements made at the turbulence tower. Sometime in the next couple of weeks, soil moisture sensors (3 depths) will also be installed at two locations within the sapflow array.

The 16m TRH data have been missing since May 21, 2010, and the sensor was acting up as early as May 11.

Upon arrival, there was no fan operating on the 16m TRH. Determined that the coupling between the 5 and 15m cables going to the 16m TRH had taken on water and/or was corroded. Replaced 15m cable (upper section) of the cabling to the 16m TRH due to the corrosion. Bottom 5m cable should be replaced too. Kurt taped the current coupling and inserted a drip loop. Should be ok for a while. Verified that the TRH was now operational and that data was now being collected.

Also replaced TRH external housing (shield, fan, firmware, etc), but used the original SHT sensor in this new housing.

Work today finalized by about 1pm LT.

On site: Kurt Knudson and Ned Patton

Just wanted to mention that we're looking into the butanol issue with seatainer 4. Butanol is used for the particle counters, and we are attempting to get rid of as much of the vapor as possible.

ACD had a catastrophic failure of the laptop controlling the particle size instrument. Last good data from that is May 3rd at 9:10pm. That system is down until we can replace the computer.

Karl Schwenz, Chris Golubieski, Gordon Maclean

Objectives:

- Diagnose and fix the reason the system has been offline for the last few days (no data in Boulder after April 27).

- Replace data system box in order to take the old box back to Boulder for a tuneup.

- Replace top TRHs which have not been working well. Add new radiation shields.

- Replace barometer with external unit.

- Make anti-climb door easier to latch.

Arrived at site, May 4 10:30.

Chris climbed the tower, replaced the TRH transducer/PIC units, and replaced the radiation shields with picnic plates.

| height | previous | new |
|---|---|---|
| 2m | TRH012 | no change |
| 7m | TRH011 | TRH009 |
| 16m | TRH015 | TRH004 |
| 30m | TRH013 | TRH001 |
| 43m | TRH016 | TRH006 |

Replaced the barometer. New unit is B1 with chassis outside of the DSM. Old was B9, inside the DSM.

Karl fixed the anti-climb door. Works like a breeze now. If door is ever difficult to latch give a hand knock on the bottom right corner to shift it to the left. Hinge bolts should be replaced with correct size on a future visit.

System was up and taking data. (Reporting about 1000 spurious interrupts per second, then later 500 after Chris replaced some TRHs). The pocketec disk has data files for April 28 to the present, so no loss of data. Could not ping manitou server, 192.168.100.1, at other end of fiber link. Power cycling the fiber/copper media converter at the tower did not help. In retrospect I should have also cycled power on the 5 port switch. Cycling power on the media converter in the seatainer also did not bring it back. Only the power LED was lit on the converter at the tower, not SDF (signal detect fiber), SDC (signal detect copper), or RXC/F (receive copper/fiber). I am not sure whether the SDC LED was on in the seatainer.

Site note: There is a strong smell of what I believe is butanol in the seatainer where the turbulence tower fibers are terminated. This is the first seatainer as one drives into the site. There are several butanol bottles in that seatainer.

The manual for the fiber/copper network converters (Transition Networks, model E-100BTX-FX-05) describes the dip switch options. I've cut and pasted some of the manual to the end of this entry. It seems that we should disable the link pass-through and far-end fault options on both converters. Link pass-through and far-end fault options can cause a copper interface to be disabled if a fault is detected on the remote copper interface or on the fiber. These options appear to be for a building network with good network monitoring equipment. Perhaps a power outage, cable disconnect, or powering down a network switch could cause the converters to think there is a fault in the copper ethernet or the fiber, and then the copper interfaces would be disabled. The manual says nothing about whether the interfaces are automatically brought up if the fault disappears.

Dip switch 1 is auto-negotiation of speed and duplex and that was left enabled=UP. Set switches 2, 3 and 4 DOWN on both converters, to disable pause control, link pass-through and far-end fault.

Pause control should be enabled if ALL devices attached to the media converters have it. I didn't know if the ethernet switches have it, so I disabled it (dip switch 2 DOWN). I now think that was a mistake and that all modern ethernet switches have pause control. So I suggest that we set switch 2 UP on both units, and power cycle them on the next visit.

With 1=UP, and 2,3,4=DOWN and power cycling both units, the link came back and we could ping the seatainer. Swapped data boxes and then couldn't ping. Eventually power cycled the 5 port switch at the tower and things worked again.

New data box is swapped in and all sensors are reporting. Taking the original pocketec back to Boulder so the system at the tower now has a different pocketec unit.

From the manual for the converters:

Pause Control

The Pause feature can improve network performance by allowing one end of the link to signal the other to discontinue frame transmission for a set period of time to relieve buffer congestion.

NOTE: If the Pause feature is present on ALL network devices attached to the media converter(s), enable the Pause feature on the media converter(s). Otherwise, disable the Pause feature.

Link Pass-Through

The Link Pass-Through feature allows the media converter to monitor both the fiber and copper RX (receive) ports for loss of signal. In the event of a loss of an RX signal (1), the media converter will automatically disable the TX (transmit) signal (2), thus, “passing through” the link loss (3). The far-end device is automatically notified of the link loss (4), which prevents the loss of valuable data unknowingly transmitted over an invalid link.

Diagram: near-end device <-1-> local media converter -2-> remote media converter <-3-> far-end device, with <-4- running from the remote converter back to the local converter.

(1) original fault on copper link; (2) local media converter sends loss signal over fiber; (3) remote disables ethernet device.

Far-End Fault

When a fault occurs on an incoming fiber link (1), the media converter transmits a Far-End Fault signal on the outgoing fiber link (2). In addition the Far-End Fault signal also activates the Link Pass-Through, which, in turn, disables the link on the copper portion of the network (3) and (4).

Diagram: near-end device <-4-> media converter A -1-> media converter B <-3-> far-end device, with <-2- running from B back to A.

(1) original fault on fiber. Media converter B detects the fault on (1), disables the copper (3), and sends a far-end fault signal to A over fiber (2). Media converter A disables its copper link (4).

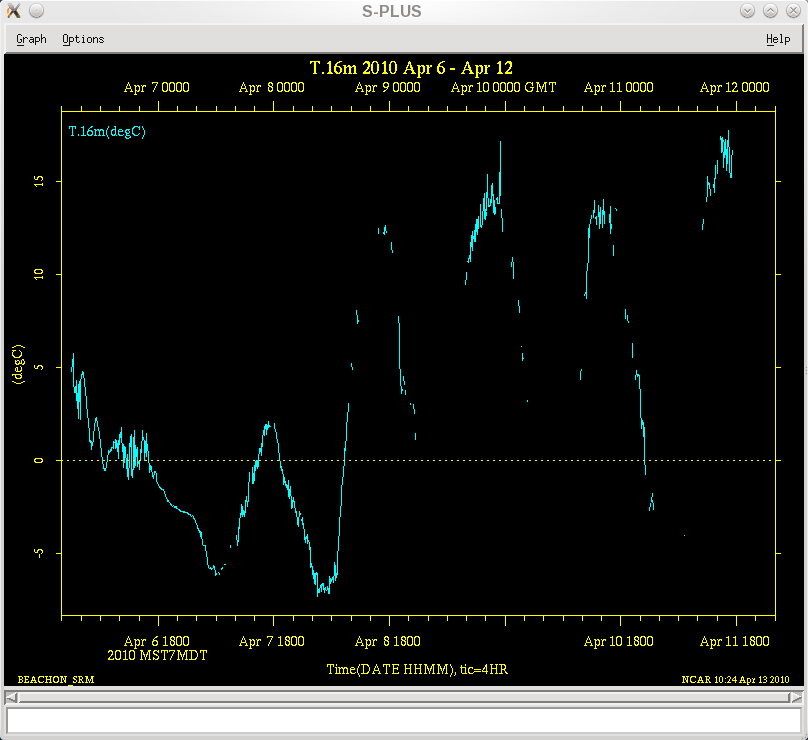

The TRH at 16 meters has had sporadic problems since Apr 8 07:00 MDT.

On Apr 10, 11 and 12 it seems to come alive in the warmest part of the day (at temperatures over 10C) and then fail as the temperature falls in the evening. See plot below.

Here is a dump of some recent data (times in GMT). It looks like the processor is getting reset, printing out its calibration information when it boots. Perhaps the power is dropping out? A corroded connection?

data_dump -i 1,320 -A manitou_20100411_000000.dat | more

2010 04 11 00:56:36.0258 0.3326 30 TRH015 7.77 36.73 4758 1178\r\n 2010 04 11 00:56:36.8640 0.8381 30 TRH015 7.79 36.69 4760 1177\r\n 2010 04 11 00:56:37.6940 0.83 30 TRH015 7.74 36.69 4755 1177\r\n 2010 04 11 00:56:39.9607 2.267 91 \x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x0 0\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x0 0\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x0 0\n 2010 04 11 01:00:58.2916 258.3 17 \r Sensor TRH015\n 2010 04 11 01:00:58.3121 0.02053 29 \rcalibration coefficients:\r\n 2010 04 11 01:00:58.3619 0.04973 21 Ta0 = -4.076641E+1\r\n 2010 04 11 01:00:58.4016 0.03978 21 Ta1 = 1.031582E-2\r\n 2010 04 11 01:00:58.4489 0.04731 21 Ta2 = -2.394625E-8\r\n 2010 04 11 01:00:58.4907 0.04176 21 Ha0 = -1.160299E+1\r\n 2010 04 11 01:00:58.5318 0.04105 21 Ha1 = 4.441538E-2\r\n 2010 04 11 01:00:58.5752 0.04345 21 Ha2 = -3.520633E-6\r\n 2010 04 11 01:00:58.6174 0.04219 21 Ha3 = 4.330620E-2\r\n 2010 04 11 01:00:58.6595 0.04213 21 Ha4 = 6.087095E-5\r\n 2010 04 11 01:00:59.3678 0.7083 24 \x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\n 2010 04 11 01:02:12.1760 72.81 17 \r Sensor TRH015\n 2010 04 11 01:02:12.1931 0.01712 29 \rcalibration coefficients:\r\n 2010 04 11 01:02:12.2448 0.05173 21 Ta0 = -4.076641E+1\r\n 2010 04 11 01:02:12.2831 0.03822 21 Ta1 = 1.031582E-2\r\n 2010 04 11 01:02:12.3282 0.04517 21 Ta2 = -2.394625E-8\r\n 2010 04 11 01:02:12.3694 0.04114 21 Ha0 = -1.160299E+1\r\n 2010 04 11 01:02:12.4133 0.0439 21 Ha1 = 4.441538E-2\r\n 2010 04 11 01:02:12.4565 0.04324 21 Ha2 = -3.520633E-6\r\n 2010 04 11 01:02:12.4920 0.03553 21 Ha3 = 4.330620E-2\r\n 2010 04 11 01:02:12.5381 0.04609 21 Ha4 = 6.087095E-5\r\n 2010 04 11 01:02:12.8732 0.335 30 TRH015 7.65 37.52 4746 1200\r\n 2010 04 11 01:02:13.7034 0.8302 30 
TRH015 7.59 37.47 4740 1199\r\n 2010 04 11 01:02:14.5444 0.841 30 TRH015 7.59 37.44 4740 1198\r\n
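Since each reset shows up as a "Sensor TRH015" banner followed by the calibration coefficients, the reset times can be pulled out of a dump automatically. A minimal Python sketch, assuming the same data_dump -A line layout as above; `find_resets` is a hypothetical helper, and treating the banner as a reset marker is our interpretation of the dump.

```python
import re
from datetime import datetime

# A TRH boot banner in data_dump -A output looks like:
#   2010 04 11 01:00:58.2916 258.3 17 \r Sensor TRH015\n
BANNER = re.compile(
    r"^(\d{4} \d{2} \d{2} \d{2}:\d{2}:\d{2}\.\d+) \S+ \d+ \\r Sensor (TRH\d+)"
)


def find_resets(lines):
    """Return (timestamp, sensor) for every boot banner found in the lines."""
    resets = []
    for line in lines:
        m = BANNER.match(line.strip())
        if m:
            when = datetime.strptime(m.group(1), "%Y %m %d %H:%M:%S.%f")
            resets.append((when, m.group(2)))
    return resets


if __name__ == "__main__":
    dump = [
        r"2010 04 11 01:00:58.2916 258.3 17 \r Sensor TRH015\n",
        r"2010 04 11 01:00:58.3121 0.02053 29 \rcalibration coefficients:\r\n",
        r"2010 04 11 01:02:12.1760 72.81 17 \r Sensor TRH015\n",
        r"2010 04 11 01:02:12.8732 0.335 30 TRH015 7.65 37.52 4746 1200\r\n",
    ]
    resets = find_resets(dump)
    for (t0, _), (t1, name) in zip(resets, resets[1:]):
        print(name, "reset again after", (t1 - t0).total_seconds(), "s")
```

On the two banners above it reports resets about 74 s apart (01:00:58 and 01:02:12 GMT).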

The TRH at 30m is acting up. Here is the data when it goes bad:

data_dump -i 1,420 -A manitou_20100314_120000.dat | more

2010 03 14 13:17:07.8073 0.82 31 TRH013 -4.18 88.21 3565 2887\r\n

2010 03 14 13:17:08.6281 0.8208 31 TRH013 -4.17 88.21 3566 2887\r\n

2010 03 14 13:17:09.4474 0.8194 31 TRH013 -4.19 88.21 3564 2887\r\n

2010 03 14 13:17:10.2680 0.8206 31 TRH013 -4.17 88.21 3566 2887\r\n

2010 03 14 13:17:11.0874 0.8194 31 TRH013 -4.17 88.19 3566 2886\r\n

2010 03 14 13:17:11.6405 0.5531 32 TRH013 560.79 -10099.38 -1 -1\r\n

2010 03 14 13:17:12.1912 0.5508 33 TRH013 560.79 -860.91 -1 29695\r\n

2010 03 14 13:17:12.7506 0.5594 33 TRH013 560.79 -728.27 -1 28671\r\n

2010 03 14 13:17:13.3080 0.5573 33 TRH013 560.79 -602.91 -1 27647\r\n

2010 03 14 13:17:13.8614 0.5534 33 TRH013 560.79 -602.91 -1 27647\r\n

2010 03 14 13:17:14.4180 0.5565 33 TRH013 560.79 -484.86 -1 26623\r\n

And when it comes back:

2010 03 14 13:25:23.2688 0.5591 33 TRH013 560.79 -374.10 -1 25599\r\n

2010 03 14 13:25:23.8211 0.5522 33 TRH013 560.79 -484.86 -1 26623\r\n

2010 03 14 13:25:24.3795 0.5584 33 TRH013 560.79 -602.91 -1 27647\r\n

2010 03 14 13:25:24.9310 0.5516 33 TRH013 560.79 -602.91 -1 27647\r\n

2010 03 14 13:25:25.6989 0.7679 35 TRH013 -4.18 -1418.74 3565 27647\r\n

2010 03 14 13:25:26.5223 0.8234 31 TRH013 -4.17 88.04 3566 2880\r\n

2010 03 14 13:25:27.3417 0.8193 31 TRH013 -4.17 88.06 3566 2881\r\n

2010 03 14 13:25:28.1604 0.8188 31 TRH013 -4.17 88.04 3566 2880\r\n

Note that the delta-Ts are around 0.82 sec when it is working correctly and around 0.55 sec when it goes nuts. Steve thinks it may be a PIC processor issue.
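The two delta-T clusters suggest a simple screening rule for the 30m TRH data. A minimal Python sketch: the threshold halfway between 0.82 and 0.55 s and the plausibility bounds on temperature and RH are our assumptions, not values from the sensor documentation, and `is_suspect` is a hypothetical name.

```python
# Threshold halfway between the healthy (~0.82 s) and misbehaving (~0.55 s)
# delta-T clusters seen in the 30m TRH dump; an assumption, not a spec value.
DT_THRESHOLD = (0.82 + 0.55) / 2

# Hypothetical plausibility bounds for temperature (C) and RH (%).
T_RANGE = (-50.0, 60.0)
RH_RANGE = (0.0, 105.0)


def is_suspect(dt, msg):
    """Flag a TRH record by its delta-T and by the range of its T and RH values.

    msg is the text after the byte count, e.g. "TRH013 -4.17 88.21 3566 2887".
    """
    fields = msg.split()
    temp, rh = float(fields[1]), float(fields[2])
    in_range = (T_RANGE[0] <= temp <= T_RANGE[1]) and (RH_RANGE[0] <= rh <= RH_RANGE[1])
    return dt < DT_THRESHOLD or not in_range


if __name__ == "__main__":
    records = [
        ("13:17:11.0874", 0.8194, "TRH013 -4.17 88.19 3566 2886"),
        ("13:17:11.6405", 0.5531, "TRH013 560.79 -10099.38 -1 -1"),
        ("13:25:25.6989", 0.7679, "TRH013 -4.18 -1418.74 3565 27647"),
        ("13:25:26.5223", 0.8234, "TRH013 -4.17 88.04 3566 2880"),
    ]
    for t, dt, msg in records:
        print(t, "suspect" if is_suspect(dt, msg) else "ok")
```

The range check matters for the first record after recovery (13:25:25.6989), which has a normal-looking delta-T of 0.77 s but an RH of -1418.74.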

Ned Patton, Sylvain Dupont, Gordon Maclean

March 12, 2010, 9:15 MST: arrived at tower.

Plan: correct problem of spotty CNR1 data, replace TRH fan at 2 meters.

rserial on port 19 showed no CNR1 data. Power cycled the CNR1 by unplugging/replugging. No data.

Switched the CNR1 cable to port 5 (the 2 meter TRH's port). The CNR1 works on that port.

Changed the config to use unused port 14 as the CNR1 port. The CNR1 also works on that port.

Power on port 19 looked OK, and a TRH worked on that port.

9:27 MST: new fan in 2 meter TRH.

Ned determined that the 7 meter TRH fan was noisy, so we replaced that too.

System voltage, as measured by the DVM in the serial test box, fluctuates from 11.8 to 13.2 volts, with a period of something like 10 seconds. I assume that is due to the trickle charger.

At one point I saw the CNR1 data pause for about 20 seconds, and then several records came out at once. That was after it had been running for a while, not at startup.

Off tower at 10:30. Left site after a tour up the walkup tower and chem seatainer.

On Feb 4, logged into system from Boulder to verify the serial numbers of the licors:

| port | SN | assumed height |
|---|---|---|
| 2 | 1163 | 2m. Moved from port 14, 30m on Feb 2 |
| 7 | 1166 | 7m |
| 20 (was 17) | 1167 | 16m |
| 14 | now disconnected | |
| 11 | 1164 | 43m |

This agrees with the configuration previous to Feb 2, and the changes of Feb 2: move of the 30m unit (SN1163) to 2m, and the move of the cable from the 16m unit (SN1167) from port 17 to port 20 on the new data system box.

To check the licor serial numbers do:

adn # shut down data process

minicom ttySN # N is port number

ctrl-a f # sends break

(Outputs (RS232 (EOL "0A0D"))) # enable carriage return in output

(Coef ?) # display serial number

(Outputs (RS232 (EOL "0A"))) # new-line termination, resume output

ctrl-a q # quit minicom

aup # restart data process

ds # wait 10-15 seconds, then

ctrl-c # verify sampling rates

Feb 2, 2010: Patton, Golubieski, Maclean on site at 10:30 MST

Planned tasks:

- Move Licor 7500 from 30 meters to 2 meters

- Investigate why data system can't sample 4 7500's at 20 Hz

- Measure guy tensions

Noticed a high-pitch sound from a TRH fan. Chris determined it was the 16m TRH. The propeller had come off the shaft, and was not repairable. Swapped the 16m and 2m TRHs, so that it will be easy for someone to replace the low unit.

Therefore the TRH at 16m has not had fan ventilation for an unknown period of time up to Feb 2, and likewise for the 2m TRH after Feb 2, until it is replaced.

Initial licor 7500 serial port configuration:

| port | height | status |
|---|---|---|
| 2 | 2m | not connected |
| 7 | 7m | OK, 9600 baud, 10Hz |
| 17 | 16m | OK, 9600 baud, 10Hz |
| 14 | 30m | OK, 9600 baud, 10Hz |
| 11 | 43m | OK, 9600 baud, 10Hz |

Looked at the RS232 transmit signal from the Licors with an oscilloscope and a serial breakout box. Ports 17, 11 and 7 all showed clean, square signals, ranging from -7V to +7V.

Moved 30m licor to 2m.

Looked at the signal ground of the 2m licor inside the Licor box, with the oscilloscope probe ground connected to power ground. Saw hair-like spikes with a range of +- 1V at the bit frequency of the signal. By shorting signal ground to power ground we could get rid of these spikes.

Temporarily switched the cables on the 7m and 2m Licors, so that the 2m unit was plugged into port 7. Port 7 is on a Diamond Emerald serial card; ports 5-20, on these cards, are the ones that have not been working at 20Hz.

Increased the sampling frequency of the 2m licor on port 7 to 20Hz, 19200 baud. Saw an increase in "spurious interrupts" which did not diminish when we shorted the signal and power grounds together, so those spikes do not seem to be the issue.

Swapped dsm chassis boxes, replacing box #1 with box #8. Also swapped PC104 stacks. This configuration did not work well at all, with data_stats showing bad data rates. Swapped the original PC104 stack back, and still saw the problem. Eventually noticed that the input from the licor on port 17 had lots of gibberish. Moved it to port 20; it cleaned up and data_stats looked OK. So we now believe this sampling issue was due to a bad port 17 on the new box and not an issue with the PC104 stack.

The PC104 stack (which is what has been at the site all along):

| card | SN |
|---|---|
| viper | #7, 3284 |
| serial ports 5-12, EMM-8M | W250017 |
| serial ports 13-20, EMM-8M | W237191 |
| power | W248060 |

This stack is now in box #8, which means it has a new Vaisala barometer. rserial shows this is unit 9: B9 752.08 5.2\r\n. The barometer in box #1 was unit B7.

Tried increasing the sampling rates of the licors to 20 Hz, 19200 baud. The number of spurious interrupts increased to somewhere around 600+. The system was generally keeping up, as shown by data_stats, but sometimes things looked bad. So reduced the rates back to 10Hz, 9600 baud. The system runs well, with a background level of around 170 spurious interrupts/sec, as it was before this visit.

The licor message length is between 44 and 49 characters. So at 20Hz this is roughly 10bits * 49 * 20/sec = 9800 bits/sec, so we must use 19200 baud instead of 9600 when sampling at 20 Hz.
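The baud-rate arithmetic generalizes easily to other message lengths and rates. A minimal Python sketch, assuming 10 bits on the wire per character (start bit, 8 data bits, stop bit) and picking the slowest standard rate that exceeds the required bit rate; the helper names are hypothetical.

```python
# Assumes 10 bits on the wire per character (1 start + 8 data + 1 stop).
BITS_PER_CHAR = 10
STANDARD_BAUDS = (9600, 19200, 38400, 57600, 115200)


def required_bps(max_msg_chars, rate_hz):
    """Bits per second needed to send max_msg_chars characters rate_hz times a second."""
    return max_msg_chars * rate_hz * BITS_PER_CHAR


def min_baud(max_msg_chars, rate_hz):
    """Slowest standard baud rate strictly faster than the required bit rate."""
    need = required_bps(max_msg_chars, rate_hz)
    for baud in STANDARD_BAUDS:
        if baud > need:
            return baud
    raise ValueError(f"{need} bits/sec exceeds every standard baud rate")


if __name__ == "__main__":
    for hz in (5, 10, 20):
        print(hz, "Hz:", required_bps(49, hz), "bits/sec ->", min_baud(49, hz), "baud")
```

For the 49-character worst case, min_baud(49, 10) gives 9600 and min_baud(49, 20) gives 19200, matching the settings used here. Using the worst-case length matters: a 44-character message at 20 Hz would just fit in 9600 baud.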

Around mid-day Ned and Chris measured the guy tensions and those values have been added to the matrix in the blog entry.

Conclusions

The problem with sampling Licors at 20Hz seems to be a data system issue, in that it cannot keep up with the total serial interrupt load from the sensors on the tower. Based on what we saw on the scope, it is not an issue with the quality of RS232 signals. Since it didn't change when we swapped system enclosures and the PC104 stacks, it is not related to interface panels, the CPU or the serial cards. The power to the Licors looks good.

Our simulation of this configuration in the lab did not include 5 TRHs (1 sample/sec), the barometer (1/sec) or the GPS (2 samples/sec). The addition of these inputs seems to break the camel's back.

The 2m licor connected to /dev/ttyS2 was removed today, and the licors at 16 and 43 meters were reconnected to ports /dev/ttyS17 and /dev/ttyS11 by a BEACHON helper.

By checking the serial numbers, with the (Coef ?) command, and comparing against the earlier LICOR log entry, we could determine remotely what unit is connected to what port. Note that the licors at 16 and 43 meters are switched from the previous configuration.

| port | height | SN |
|---|---|---|
| ttyS2 | removed | |
| ttyS7 | 7m | 1166 |
| ttyS11 | 43m | 1164 |
| ttyS14 | 30m | 1163 |
| ttyS17 | 16m | 1167 |

From the time the licors on ports 11 and 17 were enabled on Dec 2 until the next morning (Dec 3 at 10:26 AM MST), the data system was configured the old way, so the data from the licor on port 17 (SN 1167) was given an id of 1,310, and the data from the licor on port 11 (SN 1164) was given an id of 1,510. The licor data at 43 meters and 16 meters are therefore cross-identified during that time period.
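If the cross-identified data need fixing in post-processing, the correction amounts to swapping the two ids inside the affected window. A minimal Python sketch: the end time (Dec 3, 10:26 MST) and the ids 1,310 and 1,510 come from this entry, the year 2009 is inferred from the order of the log entries, and the start time is a parameter because the entry gives only the date. `corrected_id` is a hypothetical helper, not part of NIDAS.

```python
from datetime import datetime, timedelta, timezone

MST = timezone(timedelta(hours=-7))

# End of the mis-identified period from this entry: Dec 3, 10:26 MST.
# The year 2009 is inferred from the order of the log entries.
SWAP_END = datetime(2009, 12, 3, 10, 26, tzinfo=MST)

# The two licor sample ids that were swapped during that period.
SWAP = {(1, 310): (1, 510), (1, 510): (1, 310)}


def corrected_id(sample_id, when, swap_start):
    """Return the id a sample should carry.

    sample_id  -- (dsm, sensor) tuple, e.g. (1, 310)
    when       -- timezone-aware sample time
    swap_start -- timezone-aware time the port 11/17 licors were enabled on
                  Dec 2; the log gives only the date, so the caller supplies it.
    """
    if swap_start <= when < SWAP_END:
        return SWAP.get(sample_id, sample_id)
    return sample_id
```

Keeping the times timezone-aware lets GMT sample times from the data files be compared directly with the MST end time from the log.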

Basic 7500 configuration:

(Outputs (RS232 (EOL "0A") (Labels FALSE) (DiagRec FALSE) (Ndx FALSE) (Aux FALSE) (Cooler FALSE) (CO2Raw TRUE) (CO2D TRUE) (H2ORaw TRUE) (H2OD TRUE) (Temp TRUE) (Pres TRUE) (DiagVal TRUE)))

First test: 4 licors running at 10Hz, 19200 baud

(Outputs (BW 10) (RS232 (Freq 10.0) (Baud 19200)))

This resulted in around 1200 spurious interrupts being reported in /var/log/isfs/kernel

Second test: 4 licors running at 5 Hz, 19200 baud

(Outputs (BW 5) (RS232 (Freq 5.0) (Baud 19200)))

This resulted in around 300 spurious interrupts/sec being reported in /var/log/isfs/kernel

Third test: 4 licors running at 5 Hz, 9600 baud

(Outputs (BW 5) (RS232 (Freq 5.0) (Baud 9600)))

This resulted in usually fewer than 50 spurious interrupts/sec being reported in /var/log/isfs/kernel. The kernel does not report more than 50/s, so there are very few reports of spurious interrupts showing up in /var/log/isfs/kernel.

Left it running at this setting.

Current values from /proc/tty/driver/serial:

2: uart:XScale mmio:0x40700000 irq:13 tx:543 rx:1136277465 fe:1947 RTS|DTR

7: uart:ST16654 port:F1000110 irq:104 tx:1111 rx:1153977325 fe:41917 RTS|DTR

11: uart:ST16654 port:F1000130 irq:104 tx:1585 rx:3762900 fe:21542 RTS|DTR

14: uart:ST16654 port:F1000148 irq:104 tx:1549 rx:575482383 fe:3996 RTS|DTR

17: uart:ST16654 port:F1000160 irq:104 tx:1079 rx:2059346 fe:2312 RTS|DTR

Next morning, tried fourth test:

Fourth test: 4 licors running at 10 Hz, 9600 baud

(Outputs (BW 10) (RS232 (Freq 10.0) (Baud 9600)))

This resulted in around 400-500 spurious interrupts/sec being reported in /var/log/isfs/kernel. The system is keeping up, top shows an idle value of ~ 70%.

Here are the current values from /proc/tty/driver/serial. The number of framing errors is staying steady at this baud rate:

7: uart:ST16654 port:F1000110 irq:104 tx:1371 rx:1169013368 fe:41917 RTS|DTR

11: uart:ST16654 port:F1000130 irq:104 tx:2133 rx:18900757 fe:21542 RTS|DTR

14: uart:ST16654 port:F1000148 irq:104 tx:1809 rx:590564461 fe:3997 RTS|DTR

17: uart:ST16654 port:F1000160 irq:104 tx:1339 rx:17587617 fe:2321 RTS|DTR
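Comparing two snapshots of /proc/tty/driver/serial is an easy way to confirm that framing errors are not accumulating. A minimal Python sketch; `parse_serial` and `framing_error_deltas` are hypothetical helpers, and they assume the field layout shown in the snapshots here. A missing fe field is treated as zero, since the kernel omits it when the count is zero.

```python
import re

FIELD = re.compile(r"(\w+):(\S+)")


def parse_serial(text):
    """Parse /proc/tty/driver/serial text into {port: {field: value}}."""
    ports = {}
    for line in text.strip().splitlines():
        port, _, rest = line.partition(":")
        if not port.strip().isdigit():
            continue  # e.g. the "serinfo:..." header line
        ports[int(port)] = dict(FIELD.findall(rest))
    return ports


def framing_error_deltas(before, after):
    """New framing errors (fe) per port present in both snapshots."""
    a, b = parse_serial(before), parse_serial(after)
    return {
        p: int(b[p].get("fe", 0)) - int(a[p].get("fe", 0))
        for p in sorted(a.keys() & b.keys())
    }
```

Applied to the two snapshots in this entry, it gives no new framing errors on ports 7 and 11, one on port 14 and nine on port 17.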

Testing at FLAB

We've attempted to simulate the turbulence tower data acquisition configuration at FLAB, with an adam sampling 5 CSAT3 sonics at 20Hz and 1 Licor 7500 whose transmit and signal ground lines are forked to 5 separate serial ports. There is no GPS, CNR1, barometer or 5 TRHs on the test adam. We see no spurious interrupts, and the data system is keeping up with the 1x5 Licor set to 20Hz, 19200 baud. top shows a system idle value between 80 and 97%.

top on the adam at the turbulence tower shows 65-79 % idle. Other than the additional low rate serial sensors there is no difference between the 2 systems. They are both writing the full archive to a usbdisk.

18Nov09 Site Visit: CG, KK, JM

- Purpose: Install 5 Licor 7500's

- Power: We rearranged the power/batteries to accommodate the licors. A high current/trickle/equalize battery charger was connected directly to the battery chain, not through the solar/battery charger, by using the last 'external battery' amp connector. This was because an excessive feedback impedance from the Morningstar charge controller was causing the commercial battery charger to not see the proper battery voltage, preventing it from starting up to supply the loads (i.e. the battery charger was 'too smart'). A regulated, current-limiting supply wouldn't care and could be cranked up to a voltage more closely approximating solar panels (i.e. 14-15 VDC). There are now 3 batteries in the string. A power distribution Tee was connected to the 'fused load' of the primary battery box, with one secondary going to the Adam and the other going to the 5 Licor chain. The licors are being powered directly because earlier tests showed that the voltage drop in our smaller-gauge ISFS serial cables was excessive and caused the higher-level licors to not have adequate supply voltage. The internal solar-battery-charger is being used only as a 'low-voltage-disconnect' switch for these 2 loads.

- Licors Installed: The 43m and 30m serial cables had been added before, so we only installed those for 2.5, 7.5 and 15m.

| Level | Licor S/N | Adam ttySx | Freq (Hz), BPS | Comments |
|---|---|---|---|---|
| 43m | 1164 | 17 | 10,9600 | Disconnected from panel, diamond board #2 |
| 30m | 1163 | 14 | 10,9600 | Refurbished recently |
| 15m | 1167 | 11 | 10,9600 | Disconnected from panel, diamond board #1 |
| 7.5m | 1166 | 7 | 20,19.2k | |
| 2.5m | 0813 | 2 | 20,19.2k | Refurbished recently, On Viper board |

- Licor Ingest Problems: After installing the licors we observed many 'spurious interrupts' on the adam console. These were caused by the licors, especially those at 43 and 15m, but those were not the only ones. At first we thought the cables may have been 'funky' and tried removing some cable ties, to no avail. When we disconnected the worst offenders, the adam was able to run data_stats and the statistics for the licors looked OK, as did the console rserial printouts. However, the diamonds/adam were clearly having difficulty. Gordon was able to log in to the system and reprogram a few of the sensors to slow them down to overcome these interrupts, and although at first that appeared to work, it did not. Pow-wow time. I suspect a power/serial ground issue combined with the serial driver circuit on the licors, although the diamond boards may be culpable. It is noteworthy that there are 2 different diamond boards involved. We left the system running, but with the 15 and 43m licors disconnected.

Here are my notes from this week on the first servicing we've ever done on our Li7500s.

Note that S/N 0813 and 1166 came back from LiCor this summer after being repaired (from lightning damage), so no service was performed on them.

The following all was done using LiCor's software (installed on the Aspire). Before service:

| S/N | Last Cal | CO2 zero | CO2 span | H2O zero | H2O span | AGC |
|---|---|---|---|---|---|---|
| 0813 | Didn't read | | | | | |
| 1163 | 24 Oct 06 | 0.8697 | 0.9968 | 0.8468 | 0.9946 | 49% |
| 1164 | 24 Oct 06 | 0.8883 | 1.017 | 0.8601 | 0.9980 | 50% |
| 1166 | 10 Jul 09 | 0.8896 | 1.0051 | 0.8583 | 0.9969 | 53% |
| 1167 | 24 Oct 06 | 0.8768 | 0.9978 | 0.8678 | 0.9961 | 48% |

On the afternoon of 10 Nov 09 (after Chris & Jen recovered them from MFO), I dumped the desiccant/scrubber (Magnesium Perchlorate; Ascarite2) from the bottles of 1163, 1164 and 1167 and replaced them with new Drierite and Ascarite2, following the manual instructions. I put a sticky label on one bottle in each sensor with this replacement date. The instructions say to run the sensors for at least 4 hours before proceeding with calibrations, so they were all left powered on overnight.

On the afternoon of 11 Nov (~23 hours after changing chemicals), we went through the calibration procedure using a cal lab nitrogen tank for zero CO2 and H2O and a tank I borrowed from Teresa Campos/Cliff Heizer at 404.316ppmV for span CO2. Both of these were supplied to the 7500 using the LiCor calibration tube at a flow rate of about 1.3 lpm. Span H2O was achieved by placing the entire 7500 head in the Thunder chamber at T=25C, RH=80%, which should be a dewpoint of 21.31C. Since the electronics box was outside the chamber, the calibration tube was also placed in the chamber (though not in the optical path) to measure pressure. Since it seemed to take a long time (25 and 50 min, respectively, to calibrate 1167 and 1163), we used the calibration tube's temperature reading when we calibrated 1164 the next day. This sped things up a bit. The calibration in the Thunder took long enough that 1164 was done the next morning. The new readings were:

| SN | Cal Date | CO2 zero | CO2 span | H2O zero | H2O span |
|---|---|---|---|---|---|
| 1163 | 11 Nov 09 | 0.8709 | 1.0082 | 0.8510 | 1.0229 |
| 1164 | 12 Nov 09 | 0.8902 | 1.0233 | 0.8635 | 1.0008 |
| 1167 | 11 Nov 09 | 0.8770 | 1.0207 | 0.8732 | 0.9986 |

AGC values were not logged, but noted to be within 1% of the earlier readings (even with the tube on).

With one exception, these values are higher than before service, indicating more absorption now. I note that I didn't clean the optics prior to this calibration, which probably should have been done, but spot checks of a few of the sensors looked pretty good.

Chris also ran 0813 and 1166 into the chamber (with a dewpoint still at 21.31C) and found that these sensors showed dewpoints of 22.07C and 21.45C, respectively. I'm a tiny bit concerned about this value from 0813.
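The 21.31C target dewpoint can be checked from T=25C and RH=80% with a Magnus-type approximation. This sketch uses the commonly quoted coefficients b=17.62 and c=243.12C, which is our choice and not necessarily the formulation the Thunder chamber uses; `dewpoint_c` is a hypothetical name.

```python
import math

# Magnus-type coefficients (b dimensionless, c in deg C). These are commonly
# quoted values; the Thunder chamber may use a different formulation.
B = 17.62
C = 243.12


def dewpoint_c(temp_c, rh_percent):
    """Approximate dewpoint (C) from air temperature (C) and RH (%)."""
    gamma = math.log(rh_percent / 100.0) + B * temp_c / (C + temp_c)
    return C * gamma / (B - gamma)


if __name__ == "__main__":
    target = dewpoint_c(25.0, 80.0)  # chamber setting from the log
    print(f"target dewpoint: {target:.2f} C")
    for sn, reading in (("0813", 22.07), ("1166", 21.45)):
        print(f"{sn}: {reading:.2f} C, offset {reading - target:+.2f} C")
```

This gives about 21.31C, so 0813 read roughly 0.76C high and 1166 roughly 0.14C high.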

Note that the LiCor software changed the instrument settings. They will be reset before (re)deployment at MFO.

Nov 9, 12:30 MST.

Per Steve Oncley, increased the sampling rate of the Licor 7500 at 43 meters. It is the only unit connected right now.

Commands to change the sampling rate:

adn # shut down data process; adn also powers off port 17

eio 17 1 # power port 17 back on

The default baud rate of minicom on that port is 9600, which is what the 7500 uses after it is sent a break. To set it to 9600 explicitly, use ctrl-A pe. Use ctrl-A minicom commands to turn on local echo (e), line wrap (w) and send a break (f). Then set the bandwidth to 20 and the RS232 output frequency to 20, and set the terminator to 0A (newline):

minicom ttyS17 # start minicom

ctrl-A pe

ctrl-A e

ctrl-A w

ctrl-A f

(Outputs (BW 20) (RS232 (Freq 20.0)))

ctrl-A f

(Outputs (RS232 (EOL "0A")))

ctrl-A f

(Outputs ?)

ctrl-A q # minicom should now be finished

eio 17 0 # power off port 17

aup # start data process; this will power up port 17

ds # wait a bit, then

ctrl-C

If the 7500 doesn't respond, try power cycling it:

eio 17 0

eio 17 1

On site: Golubieski, Semmer, Militzer, Maclean. Dave Gochis was also at the tower site, installing soil sensors.

Installed splint at ding in tower upright.

Reinstalled TRH transducers in ventilation units at all levels.

Started installing Licor 7500's. At 43 meters, S/N 1167, port ttyS17.

Measured total power draw at the data system, before and after unit 1167 was installed:

| Condition | Voltage | Current | Power | Add'l Power |
|---|---|---|---|---|
| without 43m 7500 | 13.0 V | 1.4 A | 18.2 W | |
| with 43m 7500 | 12.6 V | 3.0 A | 37.8 W | 19.6 W |
| settled to | 12.8 V | 2.3 A | 29.4 W | 11.2 W |
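The Power column is V × I, and Add'l Power is the increase over the first row. A quick Python check of that arithmetic using the recorded values (the helper name `power_w` is ours):

```python
# (condition, volts, amps) as recorded in the table above
READINGS = [
    ("without 43m 7500", 13.0, 1.4),
    ("with 43m 7500", 12.6, 3.0),
    ("settled to", 12.8, 2.3),
]


def power_w(volts, amps):
    """DC power in watts."""
    return volts * amps


if __name__ == "__main__":
    baseline = power_w(READINGS[0][1], READINGS[0][2])
    for label, volts, amps in READINGS:
        p = power_w(volts, amps)
        print(f"{label:17s} {p:5.1f} W  additional {p - baseline:5.1f} W")
```

It reproduces 18.2, 37.8 and 29.4 W, and increments of 19.6 and 11.2 W (the last is 11.24 W before rounding).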

Measured power draw of the 43 meter 7500 only, with the serial line power meter at the adam:

| Unit | Voltage | Current | Power |
|---|---|---|---|
| 43 meter 7500 | 12.4 V | 0.87 A | 10.8 W |

Tried to prepare units 813 and 1163 for installation at 30 meters, but could not get them to boot and run with required length of cable. Red LED at circuit board would stay on, or blink on and off. It is supposed to go off once the unit is running. Measured 8 V at the end of 30 meter cable with unit attached.

Installed S/N 1163 and 1164 at 30 and 16 meters, but without data/power cables.

| level | Licor 7500 S/N | status |
|---|---|---|
| 43m | 1167 | port 17, working |
| 30m | 1163 | not cabled |
| 16m | 1164 | not cabled |
| 7m | | |
| 2m | | |

The DC power supply in the enclosure that charges the batteries is rated at 3 A, which will not be large enough to drive the system with 5 Licor 7500s, since each Licor needs about 0.9 A.
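A rough Python load estimate makes the shortfall concrete. It assumes the currents simply add (about 1.4 A for the system without any 7500, from the power table above, plus about 0.9 A per Licor) and ignores battery charging current and voltage variation; `load_amps` and `max_licors` are hypothetical helpers.

```python
CHARGER_A = 3.0   # rating of the DC supply, from the log
BASE_A = 1.4      # system draw without any 7500 (power table above)
LICOR_A = 0.9     # approximate draw per Li-7500, from the log


def load_amps(n_licors):
    """Rough total DC load, assuming the currents simply add."""
    return BASE_A + n_licors * LICOR_A


def max_licors(limit_a=CHARGER_A):
    """Largest number of 7500s whose estimated load stays within limit_a."""
    n = 0
    while load_amps(n + 1) <= limit_a:
        n += 1
    return n


if __name__ == "__main__":
    for n in range(6):
        total = load_amps(n)
        verdict = "within" if total <= CHARGER_A else "exceeds"
        print(f"{n} Licors: {total:.1f} A, {verdict} the {CHARGER_A:.0f} A supply")
```

Under these assumptions one 7500 fits within 3 A (about 2.3 A, close to the settled reading in the table), but two or more do not; five would need about 5.9 A.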

The beacon was running off a separately charged battery with an inverter. Connected the beacon directly to AC power and added the battery to the bank of 2 that power the data system and tower sensors. So the beacon is not a load on the DC supply anymore, and there are 3 batteries providing power to the tower, that are charged by the DC supply via a charge controller.

Installed Kipp and Zonen CNR1 net radiometer. Data is being received. Distance from top of CNR1 boom to top of tower base plate is 22.38 meters. Chris said that per the bubble level indicator the unit is quite level.

Distance from top of CNR1 boom to top of 16 meter sonic is 6.31 meters. So the top of this sonic boom is at 22.38 - 6.31 = 16.07 meters.

Moved network switch from adam to power enclosure. Dave Gochis will probably use one of the ethernet ports for his Tsoil CR1000. After this switch, the fiber/copper media converter would not connect - even after several power cycles of converter and switch. Had to power cycle the media converter in the seatainer. This may mean that the fiber network will not always come up after a power outage without intervention.

Untaped pressure inlet (13:33 MST).

Updated NIDAS software on adam to version 5051M.

Installed anti-climb cage on tower.