installed TNW01

20 m ARL RMY 81000 s/n 1359

10 m ARL RMY 81000 s/n 645

2 m ARL RMY 81000 s/n 712

mote # 55

PTB220 s/n U4110003

DSM power panel fried upon hookup, power was ok at cable end before attaching, but the smoke was let out at power up.

will install spare DSM tomorrow?

added 2nd battery at TSE 02 and grounded DSM

TNW 11 installed 14 v P/S

RiNE 07: swapped ports 4 and 6 (NR01 and EC150), data streams now look OK

verified that Tr NW 06 and Tr NW 05 towers are installed - 05 is a real bugger to get to.

Attempted to power up V04 but power run is about 42 meters, and would exhaust our supply of long power cables, so I am making a cable out of the zip cord and will attempt to install tomorrow.

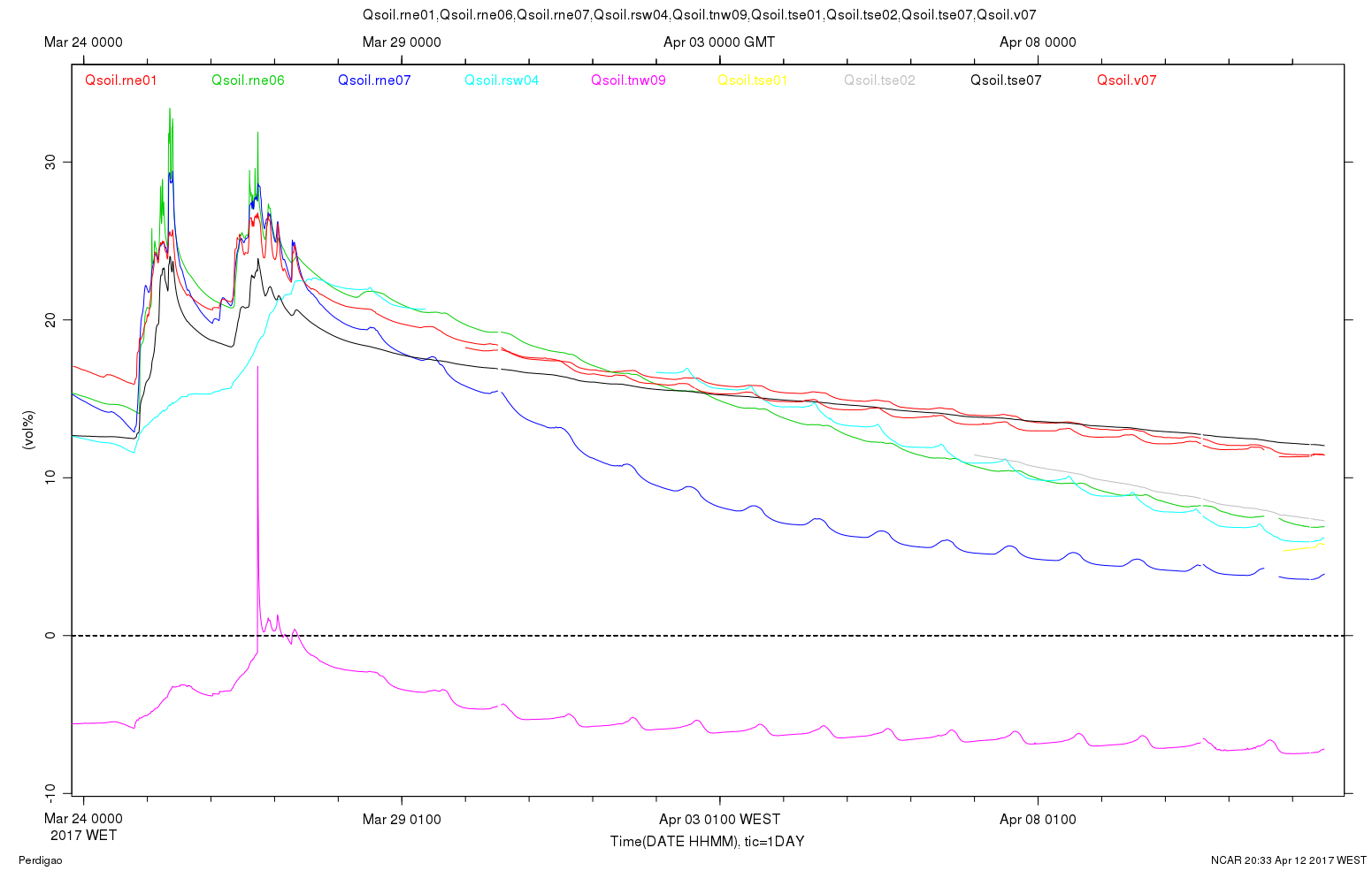

At Gary's inquiry, I have plotted Qsoil from tnw09 for the last 20 days. This sensor has read mostly negative values consistently, but clearly is responding, for example to a precip event 17 days ago. We should check the sensor cable connection at this site. (All of the Wisard boards are plugged directly into the mote boxes, so this connector is easily accessible.) Qsoils should be the red Wisard board.

In any case, we should take regular soil cores at this site (of course, we should take them at every site), to be able to create an in-situ calibration.

Just playing in minicom, I get "sort of" the correct data from this Licor using 19200 baud, 7-E-1. This tells me that the baud rate is correct and the instrument is trying to report. I suspect a bad ground connection, either in the cable (not too likely) or (much more likely) in the screw-terminal cable terminations inside the Li7500 electronics box.
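To put a number on "sort of" correct, one option is to count how many received bytes fail an even-parity check. This is a minimal sketch, assuming the raw bytes were captured with the port set to 8 data bits and no parity, so that the parity bit shows up as bit 7 of each byte. The function names are hypothetical and not part of any existing tool.

```python
def even_parity_ok(byte):
    """True if the 8 bits (7 data + 1 parity) contain an even number of ones."""
    return bin(byte & 0xFF).count("1") % 2 == 0


def decode_7e1(raw):
    """Strip the parity bit from each byte and count parity failures."""
    chars = []
    errors = 0
    for b in raw:
        if not even_parity_ok(b):
            errors += 1
        chars.append(chr(b & 0x7F))  # keep only the 7 data bits
    return "".join(chars), errors


def encode_7e1(text):
    """Build on-the-wire bytes for 7-bit ASCII with even parity (for testing)."""
    out = bytearray()
    for ch in text:
        code = ord(ch) & 0x7F
        parity = bin(code).count("1") % 2  # 1 if the data bits have odd weight
        out.append(code | (parity << 7))
    return bytes(out)
```

A high error count with otherwise recognizable text would be consistent with a noisy or poorly grounded line rather than a wrong baud rate.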

We set up station TrSE01 today, long and hard. Dan spent about 6.5+ hrs on the tower with NO complaints - what a trooper!

Spent lots of time trying to get the 10m sonic (csat3a) on line.

Very frustrating. The sonic was working well in the ops center this morning, but refused to cooperate once on the tower.

Tried multiple grounding techniques, isolation, etc. to no avail.

Will have to revisit later.

We may need to have more power cable sent over to accommodate solar panel and power pole to station runs, will assess tomorrow.

Power is down at the ops center, so we don't know if TrSE01 is reporting.

No other sites visited today.

got valley 3 instrumented today, and after replacing the 2 meter CSAT3A head got all the instruments working. The original head gave only NaNs. Waiting on INEGI to supply batteries to power up the station.

replaced the cable at TNW 07 20 meter TRH, and replaced the 12v P/S with a 14 volt unit. (60 meter TRH now working on its own)

Having issues with Valley 07 20 meter Licor and CSAT3A, will explore other avenues tomorrow hopefully.

Questions about needing serial #'s for TNW 07 - all NCAR sensors there are TRH's, and they report their S/N's - do we need the ID's from the RMY sonics that are the only other sensors on the tower?

Power pole and drop for Valley 04 is about 35 yds from the tower. Will attempt to run DC cable, but I am concerned about IR drop over that distance. Will use a 14 V P/S to compensate, but that takes another 14 V supply from other needy stations.
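The IR drop for a run like this can be estimated ahead of time. The sketch below is only illustrative: the wire resistance (roughly that of 16 AWG copper) and the station load current are assumptions, not measured values, and should be replaced with numbers for the actual cable and station.

```python
YARD_M = 0.9144  # meters per yard


def ir_drop(length_m, current_a, ohm_per_m):
    """Round-trip voltage drop: current flows out and back, so 2x the run length."""
    return current_a * 2.0 * length_m * ohm_per_m


run_m = 35 * YARD_M          # 35 yd run from the entry above, about 32 m
OHM_PER_M = 0.0132           # ASSUMED: approximate value for 16 AWG copper
LOAD_A = 1.0                 # ASSUMED station load; measure on site

drop = ir_drop(run_m, LOAD_A, OHM_PER_M)
for supply_v in (12.0, 14.0):
    print(f"{supply_v:.0f} V supply -> {supply_v - drop:.2f} V at the DSM")
```

Thinner wire or a heavier load scales the drop proportionally, so the same function can be rerun once the real gauge and current are known.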

only 2 more towers to instrument until user sensors arrive.

These are PC104 DSMs (I can't remember which are Titans and which Vipers) which have not been online even though their ubiquiti radios are online. I visited each to see what I could figure out.

rsw02

My first realization was that I didn't have a console cable for these DSMs, so I didn't try to log into this one. I did notice that the ethernet ribbon cable was disconnected from the interface panel, but I wasn't sure of the orientation of the plug. I rebooted it anyway and left to check on the plugs in the other DSM. At rsw05 I saw that the ribbon cable gets plugged in with the ribbon exiting away from the RJ45 jack.

So that's how I plugged it back in at rsw02 on the way back.

Nagios on ustar reported that rsw02 then started responding to pings and kept doing so for a few hours, until I tried to run an ansible playbook on it from ustar. It has not responded since. I should have tried to log into it first to see whether anything else was working, but I didn't.

rsw05

Rebooted, just to start from scratch, but maybe not necessary. The ethernet ribbon cable is plugged in and I see lights, so I can't see anything obviously wrong.

So I disconnected the ubiquiti at the bulgin port and connected my laptop to the internal RJ45 jack. Ssh works, I can log into the DSM. The IP address is correct: 192.168.1.173, GPS is locked and chrony in sync, usb is at 50%.

No data from the METEK sonic.

Next I disconnected the ethernet ribbon cable, plugged ubnt radio back in, with my laptop still plugged into the internal RJ45 jack. I cannot ping or browse to rsw05u, but I know it's up because it has been pingable for the last week.

So should it be possible to connect to the ubiquiti through the internal RJ45 jack? If so, does that mean there is a problem with the ethernet connections on the interface panel?

Next steps

I'm considering what to try next:

Log into the DSM with the console cable and investigate from there. Ping the radio, look for log messages about errors in the ethernet device.

I have one of the RJ45 jacks which plug directly into the CPU card for either the Titan or Viper. I could at least isolate the ribbon cable with a patch cable between the CPU jack and the RJ45 jack on the interface panel, but I don't think that works for both Titans and Vipers.

Work on a scheme to isolate the interface panel ethernet port. My best guesses:

- Use a cable with a bulgin female plug and RJ45 plug to convert the Ubiquiti cable to RJ45 end, then plug that into a PoE injector which gets power from somewhere, either by tapping the power panel or the external bulgin ethernet port. If the internal RJ45 works to connect to the CPU, then that can be used to patch the CPU into a switch, also powered off the power panel.

- Replace the bulgin cable entirely and run an ethernet cable up the tower same as the Pi towers. Use the same PoE injector, but it might need a different power plug. Connect that to the internal RJ45 jack same as above.

- If there is a problem between the internal RJ45 and CPU card, then I don't know what you do. Can we attach the ribbon cable to a new RJ45 jack? Use a USB ethernet interface instead of the onboard interface?

- Just replace the entire interface panel. If we don't have spares here, take one from VERTEX. Or replace the entire DSM with one from VERTEX, but keep the CPU stack.

TSE 02 was instrumented yesterday, some issues with the network but we may move an antenna soon.

The mote was not reporting but found the problem rather quickly. Measured for voltage - 0 volts. Turns out that the cable was chewed in two (gray PVC cable, not the standard green serial cable)

replaced power supplies at V 06 and V 07 with 14 volt supplies

installed anti twist plate on 2m sonic at V 07 and got the DSM's on line again, but port 3 is not working (Licor). Possibly the fuse. Will look at later.

V06 has intermittent NaNs for the 20 m sonic.

installed USB stick at V 06 (was borrowed for another station back in Feb.)

Today (4/7) instrumented RSW 08, all data coming in, but voltage is low at DSM (10.9 volts), should be fine. Explored and found 2 towers which were erected, but not according to the spreadsheet. Will instrument those soon.

April 6th setup notes: Gary, Dan and I arrived late yesterday. Wednesday we rearranged the ops center and attempted to find all the sensors and equipment left in the beginning of Feb. with some success, and some failures (we can't seem to locate at least one Metek sonic, and the PTB 220 pressure sensors as of yet).

We set up station V06 this afternoon, but are missing a USB memory stick (forgot that stick was "borrowed" for another station back in Feb). Will attempt to bring that station up tomorrow.

tnw07b is responding to pings but ssh connections are reset. Oops, I made a mistake: the nmap output does look like a DSM. (Port 22 is ssh, 8888 is tinyproxy, 30000 is dsm. The xinetd check-mk port 6556 is not listed, I suspect because nmap does not scan it by default.)

[daq@ustar raw_data]$ nmap tnw07b

Starting Nmap 7.40 ( https://nmap.org ) at 2017-03-10 17:40 WET

Nmap scan report for tnw07b (192.168.1.146)

Host is up (0.055s latency).

Not shown: 997 closed ports

PORT STATE SERVICE

22/tcp open ssh

8888/tcp open sun-answerbook

30000/tcp open ndmps
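Since the check-mk port may not be in nmap's default scan list, the four ports of interest can also be probed explicitly. This is a minimal sketch using only the Python standard library; the `check_ports` helper is hypothetical, and the port-to-service mapping is taken from the note above.

```python
import socket

# Ports named in the entry above; 6556 (check-mk via xinetd) is listed
# explicitly because the nmap scan did not report on it.
DSM_PORTS = {22: "ssh", 8888: "tinyproxy", 30000: "dsm", 6556: "check-mk"}


def check_ports(host, ports, timeout=3.0):
    """Return {port: True/False} for whether a TCP connect succeeds."""
    results = {}
    for port in ports:
        try:
            with socket.create_connection((host, port), timeout=timeout):
                results[port] = True
        except OSError:
            # Covers refused connections, timeouts, and name lookup failures.
            results[port] = False
    return results


# Example (run from a host that can resolve the DSM name):
#   for port, is_open in check_ports("tnw07b", DSM_PORTS).items():
#       print(port, DSM_PORTS[port], "open" if is_open else "closed/filtered")
```

A successful connect only shows that something is listening on the port. It would not catch the behavior described here, where the ssh port is open but sessions are reset afterwards.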

And data are coming in:

tnw07b:/dev/gps_pty0 7 2 32 2017 03 10 17:41:25.874 03 10 17:41:41.036 2.04 0.142 0.925 69 80

tnw07b:/dev/ttyUSB0 7 22 16 2017 03 10 17:41:25.828 03 10 17:41:40.958 0.99 1.008 1.009 39 39

tnw07b:/dev/ttyUSB4 7 102 16 2017 03 10 17:41:26.368 03 10 17:41:41.434 1.00 1.004 1.005 38 38

tnw07b:/dev/ttyUSB7 7 32768 4 2017 03 10 17:41:26.049 03 10 17:41:41.049 0.20 4.772 5.228 17 30

The data connection to dsm_server is in fact from the right IP address, so tnw07b appears to be configured correctly:

[root@ustar daq]# netstat -ap | grep tnw07b

tcp 0 0 ustar:51968 tnw07b:43666 ESTABLISHED 9440/dsm_server

According to nagios, tnw07b was responding to check-mk requests until 2017-03-09 10:52 UTC, so something happened then which now causes network connections to be reset. Probably this system needs to be rebooted.

Steve noticed that ports ttyS11 and ttyS12 are no longer reporting any data on rsw04. After getting rsw04 updated and clearing off the USB yesterday and restarting DSM, those ports are still not reporting. They were working until Feb 25. ttyS10 was out for a while also, but it came back this morning at 2017 03 10 12:16:47.339, before the reboot.

[daq@ustar raw_data]$ data_stats rsw04_20170[23]*.dat

2017-03-10,15:58:41|NOTICE|parsing: /home/daq/isfs/projects/Perdigao/ISFS/config/perdigao.xml

Exception: EOFException: rsw04_20170310_155028.dat: open: EOF

sensor dsm sampid nsamps |------- start -------| |------ end -----| rate minMaxDT(sec) minMaxLen

rsw04:/dev/gps_pty0 35 10 3944084 2017 02 03 09:44:08.995 03 10 15:58:31.569 1.29 0.015 1090606.000 51 73

rsw04:/var/log/chrony/tracking.log 35 15 133438 2017 02 03 09:44:53.133 03 10 15:58:25.024 0.04 0.000 1090616.750 100 100

rsw04:/dev/ttyS11 35 100 38021353 2017 02 03 09:44:08.517 02 25 09:48:48.136 20.00 -0.016 0.992 60 77

rsw04:/dev/ttyS12 35 102 38021782 2017 02 03 09:44:12.831 02 25 09:48:48.206 20.00 -0.107 1.390 40 125

rsw04:/dev/dmmat_a2d0 35 208 39114544 2017 02 03 09:44:08.570 03 10 15:58:31.363 12.84 0.034 1090604.875 4 4

rsw04:/dev/ttyS10 35 32768 767733 2017 02 03 09:44:13.130 03 10 15:58:31.137 0.25 -0.031 1132080.875 12 104
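Spotting ports that stopped reporting, like ttyS11 and ttyS12 here, can be automated by comparing each sensor's end time with the newest end time in the listing. The sketch below assumes the column layout shown in the data_stats output above. The end timestamp carries no year, so the start year is reused, which is an assumption that would break for files spanning a new year. The function names are hypothetical.

```python
from datetime import datetime, timedelta


def parse_stats_line(line):
    """Parse one data_stats row into a dict.

    Assumed layout:
    sensor dsm sampid nsamps  Y M D time  M D time  rate minDT maxDT minLen maxLen
    """
    f = line.split()
    year = f[4]
    fmt = "%Y %m %d %H:%M:%S.%f"
    start = datetime.strptime(" ".join(f[4:8]), fmt)
    end = datetime.strptime(" ".join([year] + f[8:11]), fmt)  # ASSUMES same year
    return {"sensor": f[0], "nsamps": int(f[3]), "start": start, "end": end,
            "rate": float(f[11])}


def stale_sensors(rows, max_lag=timedelta(hours=1)):
    """Sensors whose last sample is more than max_lag behind the newest one."""
    newest = max(r["end"] for r in rows)
    return [r["sensor"] for r in rows if newest - r["end"] > max_lag]
```

Feeding it the rows above would flag the two sensors whose data end on 02 25, while the others end on 03 10.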

Steve tried connecting to the ports directly yesterday and did not see anything. After the reboot, I still don't see anything either. This is a Viper, so I'm thinking ports 11 and 12 are on the second Emerald serial card, and these log messages are relevant:

[ 41.641774] emerald: NOTICE: version: v1.2-522

[ 41.842945] emerald: INFO: /dev/emerald0 at ioport 0x200 is an EMM=8

[ 41.871947] emerald: WARNING: /dev/emerald1: Emerald not responding at ioports[1]=0x240, val=0x8f

[ 41.881346] emerald: WARNING: /dev/emerald1: Emerald not responding at ioports[2]=0x2c0, val=0x8f

[ 41.890757] emerald: WARNING: /dev/emerald1: Emerald not responding at ioports[3]=0x300, val=0x8f
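If the same check needs repeating on other DSMs, the kernel log can be scanned for these warnings. This is a rough sketch: the regular expression is derived only from the three lines quoted above and may not match other versions of the driver, and `failed_probes` is a hypothetical helper.

```python
import re

# Pattern for the WARNING lines shown above; the message format is taken
# from this log excerpt only.
NOT_RESPONDING = re.compile(
    r"emerald: WARNING: (/dev/emerald\d+): Emerald not responding at "
    r"ioports\[(\d+)\]=(0x[0-9a-fA-F]+)"
)


def failed_probes(log_text):
    """Return {device: [ioport addresses that did not respond]}."""
    failures = {}
    for dev, _index, addr in NOT_RESPONDING.findall(log_text):
        failures.setdefault(dev, []).append(int(addr, 16))
    return failures
```

An empty result means no card failed to answer at any probed address. A device listed with several addresses, as here, suggests the card was not found at all.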

(I assume Preban also was there...)

Working very late (until at least 10:30pm), the DTU crew got all of our installed masts on the network, though a few DSMs didn't come up. We're very grateful!

From several Per emails:

5 Mar

The fiber for the internet is still not working, but José C. has promised that someone will come on Tuesday to have a look at it. I can see that the media converter reports an error on one of the fibers.

We have brought a Litebeam 5 AC 23dBi with us and we have placed it on the antenna pole of the ops center. That has helped significantly with the performance and stability of the link to the ridges. So I don’t think it’ll be necessary for you to manufacture any special brackets.

We have then placed the “old” Litebeam from the ops center according to Ted’s plan at rNE_06. We have also placed the 19 dBi spare NanoBeam on RiNE_07 and reconfigured Tower 10 to match the new NanoBeam. So now all that remains is to replace the last of the 3 Prisms, which I noticed is now mounted in tower 37. Could the Litebeam that Ted has ordered then replace that one?

We have gained some more bandwidth from the ops center to tower 29 by moving the frequencies further away from the ones being used by the two sector antennas at tower 29. It seemed like these three antennas close by each other were interfering.

As you have already discovered, the fiber was fixed today. It turned out that we had two issues with the connection out of here. The Rx fiber was broken close to the first junction box they have, apparently a couple of kilometers from here. The Tx fiber also had a problem with too sharp a bend at the very first electricity pole outside the building. The latter could explain the changing performance we were seeing on the line.

The last 100m tower was successfully instrumented today, and your DSM’s should with a little luck be visible on the network.

We have changed the Ubiquiti config in the 4 army alu towers behind riNE07. They should now be online.

A few of the ubiquities on the towers were not set up with the proper wireless security rules, some were locked on the MAC address of the old AP we replaced (the Prism) and the last one was set in the wrong network mode.

We have moved a few towers from the planned access point to another where the signal quality was higher. I still need to correct this in the spreadsheet; I’ll do that asap.

The ARL ubiquities all had the wrong PSK. José C. forwarded me a mail from a Sean, where he says there’s an IP conflict in one of his units, but they all seemed to have the IP addresses stated at the far right of the spreadsheet, not the .110 to .113 stated in the mail. I was not able to access the web config page as described in his mail either, but since the IPs matched Ted’s spreadsheet I put them on the network.

This was reporting all NA. pio got it to work. I'm actually surprised, since I thought we had seen this problem in Jan and had even sent people up the tower to check the sonic head connection, with no success then...

Now that the network is up and we can look at things, I'm finding lots of TRHs with ifan=0:

tse06.2m: #67, no response to ^r, responded to pio (after power cycle, responds to ^r)

tse06.10m: #43, no response to ^r, pio didn't restart fan (after power cycle, responds to ^r)

tse06.60m: #8, responds to ^r and pio, but didn't restart fan

tse09b.2m: #103, ^r worked

tse11.2m: #120, no response to ^r, responded to pio

tse11.20m: #116, responds to ^r and pio, but didn't restart fan

tse11.40m: #110, responds to ^r and pio, but didn't restart fan

tse11.60m: #121, was in weird cal land, no response to ^r, responded to pio

tse13.2m: #119, no response to ^r, pio didn't restart fan (after power cycle, responds to ^r)

tse13.100m: #111, no response to ^r, pio didn't restart fan, reset CUR 200, now running at 167mA (and T dropped by 0.2 C). WATCH THIS! (has been running all day today)

tnw07.10m: #42, no response to ^r, responded to pio

tnw07.60m: #125, ^r killed totally! pio doesn't bring back. dead.

José Carlos and Palma today adjusted the PITCH of the ops center antenna, which immediately brought tse13 and rne06 online, and improved the connection to rne07. So, for the first time, we now have all links to the ne ridge in place and working. With these links up, we are also able to get to many of the sites in the valley and sw ridge – now 17 total stations. There are another 4 sites that should be on the network, but aren't connected. An additional 3 sites are on the network, but the data system won't connect. So...progress, but still some work to do.

We think we've figured out our problem with the RMYoung sonics – our power supplies are not producing high enough voltage. The RMY has a minimum voltage requirement of 12V and our power supplies are producing 12V. However, the voltage drop through as much as 55m of DC cable (from the fuse boxes on the power drop through the DSM to the sensor) has been 0.5V or more. tnw11, for example, was reporting Vdsm=11.2V. We note that the minimum voltage for all other sensors is below 10.5V, so this is a problem specific to the RMYs. This explanation is consistent with these sensors working in the ops center (and earlier, at ARL), but not on the tower.

The solution is to use higher-voltage power supplies for the (7) masts that have RMYs and AC power. (Solar-powered stations should have batteries providing nominally 12.7V.) We've ordered 14 V supplies that we will bring with us on the next trip. (They need to have our DC power connector added to them before use, which is easiest to do in the lab.)
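The reasoning above can be checked with the numbers quoted for tnw11. This is a simple sketch, assuming the cable drop stays the same when the supply is changed to 14 V (it may differ if the load current changes), and noting that Vdsm is measured at the DSM, so any further drop between the DSM and the sensor is not included.

```python
RMY_MIN_V = 12.0  # minimum supply voltage for the RMY sonics, per the entry above


def margin_v(supply_v, drop_v, min_required_v=RMY_MIN_V):
    """Voltage left over after the cable drop, relative to the sensor minimum."""
    return (supply_v - drop_v) - min_required_v


# tnw11: nominal 12 V supply, Vdsm reported as 11.2 V, so about 0.8 V is lost.
drop_tnw11 = 12.0 - 11.2

for supply_v in (12.0, 14.0):
    m = margin_v(supply_v, drop_tnw11)  # ASSUMES the drop is unchanged at 14 V
    status = "OK" if m >= 0 else "below RMY minimum"
    print(f"{supply_v:.0f} V supply: margin {m:+.2f} V ({status})")
```

With a 12 V supply the margin is negative, which matches the sonics failing on the towers; with 14 V there is roughly a volt to spare under these assumptions.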