Data Analysis Services Group - July 2012

News and Accomplishments

VAPOR Project

Project information is available at: http://www.vapor.ucar.edu

XD Vis Award:

Efforts on the integration of PIO with VDC continued, and we are now holding semi-weekly meetings to keep the project moving forward. A single issue remains: PIO consumes a tremendous amount of memory. For example, a 1024^3 problem (4 GB at 32-bit precision) has a memory footprint of ~67 GB. The VAPOR team is working with John Dennis et al. to identify and correct the problem.
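As a quick sanity check of the figures above, the following sketch computes the raw size of one 1024^3 single-precision variable and the implied overhead. The ~67 GB footprint is taken from the report as an approximate value, and the snippet ignores the small difference between GB and GiB.

```python
# Back-of-the-envelope check of the figures quoted in the report.
GRID_POINTS = 1024 ** 3          # 1024^3 grid
BYTES_PER_VALUE = 4              # 32-bit floating point

raw_bytes = GRID_POINTS * BYTES_PER_VALUE
raw_gib = raw_bytes / 2 ** 30    # size of one variable in GiB

observed_footprint_gb = 67       # approximate figure from the report
overhead = observed_footprint_gb / raw_gib

print(f"raw size: {raw_gib:.1f} GiB, overhead: ~{overhead:.0f}x")
```

In other words, the observed footprint is roughly 17 times the size of the data itself.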

The VDC plugin reader, developed previously for ParaView, was extended to support VDC2 and the new internal VAPOR data model.

KISTI Award:

Work on adding support for missing data values to the VDC library was completed. The VDC data translation library can now correctly handle gridded data sets with missing data values. In the case of the KISTI award, this capability was needed for ocean grids that intersect land masses.

Work was also completed on extending all vaporgui visualizers to support "stretched" grids (computational grids with non-uniform sampling along one or more axes).
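To illustrate what non-uniform sampling implies for a visualizer, here is a minimal sketch of sampling a 1D stretched axis: the containing cell has to be found by search rather than by dividing by a constant spacing. This is only an illustration and not VAPOR's implementation; the function name `interp_stretched` and its arguments are hypothetical.

```python
import bisect

def interp_stretched(coords, values, x):
    """Linearly interpolate values sampled at monotonically increasing coords."""
    if not coords[0] <= x <= coords[-1]:
        raise ValueError("x lies outside the grid")
    # Index of the cell [coords[i], coords[i + 1]] that contains x.
    i = min(bisect.bisect_right(coords, x) - 1, len(coords) - 2)
    t = (x - coords[i]) / (coords[i + 1] - coords[i])
    return (1 - t) * values[i] + t * values[i + 1]

# Sample spacing grows along the axis: 1, 2, then 4 units.
coords = [0.0, 1.0, 3.0, 7.0]
values = [0.0, 10.0, 30.0, 70.0]
print(interp_stretched(coords, values, 5.0))
```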

Development:

- Ashish Dhital, a SIParCS intern from the U. of Wyoming, has been working with Alan to provide keyframing capability for animation control in VAPOR. Ashish developed the spline interpolation of VAPOR viewpoints and implemented a reparameterization to enable control of camera speed. Alan wrote a user interface for this feature and connected it to Ashish’s spline interpolation code. Users can specify camera locations and the speed at which the camera will move, and VAPOR will interpolate these into a smooth curve that can be played and captured. This feature is almost complete and should be available in the next release of VAPOR.

- Yannick has been working on porting VAPOR to Mac OS 10.7, and updated the Qt library from version 4.6 to 5.0. Numerous changes in Qt 5.0 have made the port difficult. Our team develops for three major platforms (Unix, Linux, Windows). My workstation was recently upgraded to OS X Lion 10.7.4. After the upgrade, the VAPOR build broke because Apple removed the 10.5 SDK, which was the default used to build VAPOR. VAPOR had to be updated to use the 10.6 SDK, after which it became clear that our version of Qt (4.6.1) was too old to support OS X Lion. I took the latest stable version of Qt (4.8.2), built it locally, and installed it into my local copy of the VAPOR third-party libraries. With this, VAPOR builds successfully on OS X Lion, but graphical bugs that we were not previously aware of have started to appear, caused by changes in either the Qt library or OS X. Because of the nature of the Qt integration, figuring out where the errors occur is a difficult process. My current goal is to fix the graphical bugs to bring VAPOR back to parity with the release version and, along the way, to upgrade VAPOR's error reporting capabilities so that another OS or library change does not cause such cryptic errors.
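The keyframing approach described above (spline interpolation of viewpoints plus a reparameterization for speed control) can be sketched as follows. This is not the VAPOR code: it assumes a uniform Catmull-Rom spline through camera positions only and a sampled arc-length table for the reparameterization, and all function names are hypothetical.

```python
import bisect
import math

def catmull_rom(p0, p1, p2, p3, t):
    """Uniform Catmull-Rom point between p1 and p2 for t in [0, 1]."""
    t2, t3 = t * t, t * t * t
    return tuple(
        0.5 * (2 * b + (c - a) * t + (2 * a - 5 * b + 4 * c - d) * t2
               + (3 * b - a - 3 * c + d) * t3)
        for a, b, c, d in zip(p0, p1, p2, p3)
    )

def spline_point(keys, u):
    """Position on the spline through keys; u ranges over [0, len(keys) - 1]."""
    n = len(keys)
    i = min(int(u), n - 2)
    # End segments reuse the first/last keyframe as the missing neighbor.
    p0 = keys[max(i - 1, 0)]
    p3 = keys[min(i + 2, n - 1)]
    return catmull_rom(p0, keys[i], keys[i + 1], p3, u - i)

def arc_length_table(keys, samples_per_segment=64):
    """Cumulative path length sampled at evenly spaced parameter values."""
    total = (len(keys) - 1) * samples_per_segment
    us = [k / samples_per_segment for k in range(total + 1)]
    lengths = [0.0]
    prev = spline_point(keys, us[0])
    for u in us[1:]:
        cur = spline_point(keys, u)
        lengths.append(lengths[-1] + math.dist(prev, cur))
        prev = cur
    return us, lengths

def point_at_distance(keys, us, lengths, s):
    """Reparameterize by arc length: the point s units along the path."""
    s = min(max(s, 0.0), lengths[-1])
    j = bisect.bisect_left(lengths, s)
    if j == 0:
        return spline_point(keys, us[0])
    # Interpolate the spline parameter between the bracketing samples.
    span = lengths[j] - lengths[j - 1]
    frac = (s - lengths[j - 1]) / span if span > 0 else 0.0
    return spline_point(keys, us[j - 1] + frac * (us[j] - us[j - 1]))

def camera_path(keys, speed, fps=30):
    """Per-frame camera positions at a constant speed (units per second)."""
    us, lengths = arc_length_table(keys)
    step = speed / fps
    frames = int(lengths[-1] / step)
    return [point_at_distance(keys, us, lengths, k * step)
            for k in range(frames + 1)]
```

Without the arc-length step, stepping the spline parameter uniformly would make the camera speed up and slow down between keyframes; the lookup table is what makes a user-specified speed meaningful. A variable speed could be handled the same way by accumulating a per-frame step instead of a constant one.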

Education and Outreach:

John participated in the lab component of the annual WRF tutorial, helping students who were using VAPOR to explore their WRF outputs for the first time.

Software Research Projects

The internal LDRD proposal submitted by Kenny Gruchalla at NREL has been awarded. The VAPOR team members are unfunded collaborators on this award, and will provide consulting to help NREL extend VAPOR's multiresolution data format to better support wind turbine array modeling.

ASD Support

- Alan has been working with Gabi Pfister on her ASD project, simulating the effect of climate change on atmospheric pollutants in North America. Alan met with Gabi and determined the derived variables that will be needed, and wrote Python scripts to generate these in VAPOR. Gabi will generate many terabytes of data during her planned ASD runs, so we are experimenting with the use of VAPOR compression to reduce the storage required for the data visualization.

- Alan is also providing visualization support for Baylor Fox-Kemper and Peter Hamlington’s ASD project that examines the turbulence in ocean water induced by contact with the atmosphere. The initial data that Peter Hamlington generated was not saved with sufficient temporal resolution to visualize the wave motion at the surface. Peter is currently saving the data for a smaller subregion at a 10-fold increase in temporal resolution.

- John was able to use the new stretched grid rendering capabilities (see KISTI above) to produce visualizations for NCAR's David Richter.

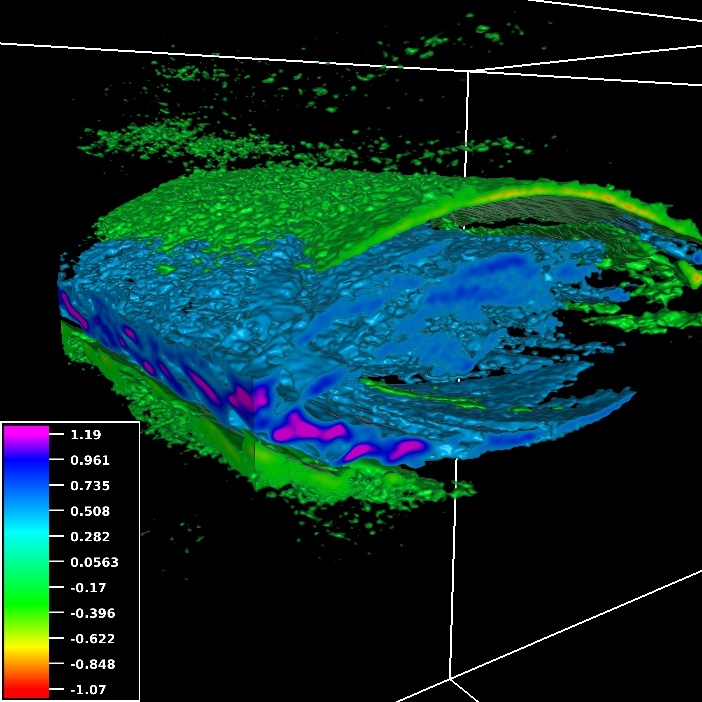

- John wrote data translators for two of the ASD awardees (Shay and Ireland) that are capable of reading their model outputs and generating VDC data (in the case of Shay) and Silo data (Ireland). The Silo data format is needed so we can take advantage of ParaView and VisIt's more advanced particle data set display capabilities. A number of preliminary visualizations have been produced, such as the image below from Shay's data:

Systems Projects

Security & Administration Projects

- Continued tracking HUGAP project progress. It is not yet usable for production authentication and authorization purposes on GLADE resources. Worked on code to handle ingest of this data via LDAP and to marry it to system-specific information sources.

- Added accounts for IBM personnel on picnic or glade as needed. Helped solve VNC connectivity issues for IBM personnel for installation and testing.

- Evaluated demos of possible NFS appliance packages for the NFS server for log file storage for GLADE servers and the compute nodes, and as a fallback service for the GLADE home directories. Worked on evaluating the hardware configuration of the SGI solution and read the documentation.

NWSC Planning & Installation

- In preparation for the DAV ATP, the parallel visualization package, ParaView, was built and tested in parallel execution mode on the storm cluster. ParaView will have to be rebuilt for Geyser/Caldera, but pre-building the application provided the opportunity to explore its capabilities and put together a suite of benchmark data sets for load testing on the NWSC systems.

- Set up three file systems on picnic with different block sizes (1M, 2M, and 4M). The goal was to evaluate the estimated performance penalty in analysis applications as the block size of the file system increases.

- Installed fresh copies of HDF5-1.8.7, a release candidate version of netCDF (netCDF-4.2.1-rc1) with support for in-memory operation, and the current development version of NCO-4.2.1 from the subversion repository for the block size dependence tests.

- Performed the ncks tests using a single sample 3.2 GB netCDF file. Completion times of the identical command and the strace outputs were compared, leading to the conclusion that a larger block size can be favorable thanks to the loop index change in the ncks source code. Reading is still more efficient in total bytes with smaller block sizes, but the number of calls tends to negate that efficiency, leading to the same order of magnitude in overall performance (within a single-digit percentage difference).

- Had a discussion with John Dennis about the Green and Orange parts of the CMIP analysis workflow. Exchanged e-mails with John Dennis on his preliminary results for the Orange phase (split and concatenate operations on netCDF files).

- Performed a survey of average netCDF file size on /glade/data01, finding a mean of 42.5 MB across ~1.2M files. The largest file was 442 GB. Given this average, it will be safer to tune the file system toward the larger block size.

- Started the picnic system rebuild on picnicmgt2 following the xCAT management server rebuild procedure provided by IBM. After firmware updates to prevent a KVM hang, we installed RHEL 6.2 on a blank disk and configured the xCAT server based on a combination of the glademgt tweaks and the previous picnicmgt configuration. The major part of provisioning TNSD2 was done to automate the basic installation up to the kernel update. Further work on the IB kernel update and GPFS installation is in progress.

- Performed benchmark tests of IB connections on picnic with "qperf" and "perfquery".

- Changed BIOS settings on the NSDs and applied a few settings in an attempt to get better performance over the InfiniBand HCAs.

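A file-size survey like the one reported for /glade/data01 could be reproduced with a short script along these lines. This is a sketch only: the report does not say how netCDF files were identified, so matching on a `.nc` suffix is an assumption, and the function name `survey_sizes` is hypothetical.

```python
import os

def survey_sizes(root, suffixes=(".nc",)):
    """Return (count, mean_bytes, max_bytes) for matching files under root."""
    count = total = largest = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            if not name.endswith(suffixes):
                continue
            path = os.path.join(dirpath, name)
            if os.path.islink(path):
                continue  # avoid counting the same file twice via symlinks
            try:
                size = os.stat(path).st_size
            except OSError:
                continue  # file vanished or is unreadable; skip it
            count += 1
            total += size
            largest = max(largest, size)
    mean = total / count if count else 0.0
    return count, mean, largest
```

On a file system with over a million files a single-threaded walk like this is slow; a production survey would more likely use the file system's own policy or metadata scanning tools.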

System Support

Data Analysis & Visualization Clusters

- Configured a very preliminary ssh "forcecommand" script to allow DSS/rdadata to submit LSF jobs to mirage3/4 using ssh keys. This is in preparation for transition to new resources at NWSC.

- Remade the xorg.conf file on storm0/storm1 to enable a full resolution display to allow John Clyne to test ParaView.

- Disabled the user sync on twister to prevent active accounts from being closed now that frost has been taken offline.

GLADE Storage Cluster

- Started rebuilding 76G after it was disabled due to a media error.

- the mich1098 project space for the DCMIP project.

- Increased several approved users' quotas on /glade/scratch.

- Worked with Paul Goodman to allow two new clients to mount GLADE filesystems.

- Generated a list of all files with a GID < 1000 and != 100.
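The GID listing in the last item could be produced with a walk such as the one below. This is a sketch under assumptions: the report does not say which tool was used, and the function names and default arguments are hypothetical.

```python
import os

def gid_matches(gid, limit=1000, exempt=(100,)):
    """True for GIDs below limit that are not in the exempt set."""
    return gid < limit and gid not in exempt

def files_with_low_gid(root, limit=1000, exempt=(100,)):
    """Yield (path, gid) for files under root whose GID matches the filter."""
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                gid = os.lstat(path).st_gid  # lstat: do not follow symlinks
            except OSError:
                continue  # file vanished or is unreadable; skip it
            if gid_matches(gid, limit, exempt):
                yield path, gid
```

The equivalent selection with GNU find would combine `-gid -1000` with `! -gid 100`.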

Data Transfer Cluster

- Continued to help Si sporadically in trying to identify possible causes of slow GridFTP transfer performance from NCAR to other sites (NICS, ORNL) when Globus Online is managing the transfer. There is a definite asymmetry between the speeds inbound to GLADE (faster) and outbound from GLADE (slower). Due to NWSC tasks and lack of knowledge of the intermediate network paths, there isn't much we can do. The GLADE file system itself does not appear to be the bottleneck.

Other

- Built the HTAR binary for a RAL system in coordination with Carter Borst. This case posed a challenge, with failures in the functionality tests. Troubleshooting based on a trace of network connections revealed that this particular host had multiple network interfaces with an identical hostname, which led to hung HTAR transfers to the internal network. Revised the wrapper script to force the connection to the external interface to make it work. Responded to further questions on usage from the new host, and pointed them to the official user guide by Michael Gleicher.