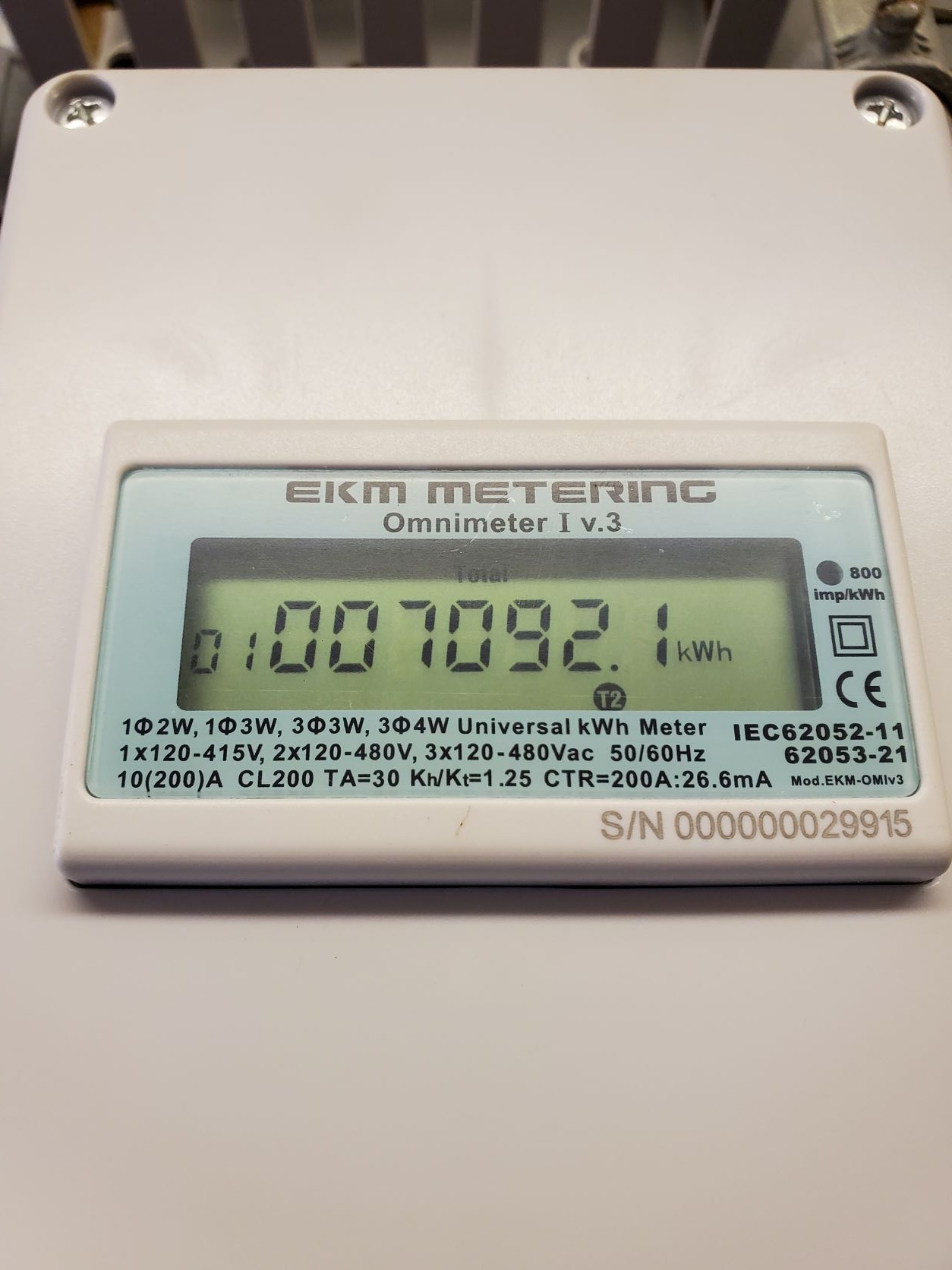

The trailer and pods are ready to go. Took a picture of the power usage and emailed to John.

The bolt that holds the back stairs of the trailer is missing... and the arm that it is attached to is seized up. I have it safely stowed with chain, lock and straps. Trailer pickup is scheduled for 8am tomorrow. PODs has an all day window for pickup.

I brought the GSA in for a good washing. It is of acceptable cleanliness.

Progress. Clayton and I got the PODs and Base Trailer ready for the final touches. Gary has returned to Boulder. Clayton has plans to leave midday Wednesday. We plan to disconnect power (take pictures of power usage) and do the final packing Wednesday morning. Also, I am going to wash our trustworthy, but currently gross, GSA Pickup.

I'm gonna take a down day tomorrow. Whale watchin!

All SWEX site equipment has been removed from project locations and accounted for.

Spent time organizing the PODs and Base Trailer today. Submitted final soil sample form. Clayton and I are likely to finish travel prep Monday and Tuesday. Gary has been honorably discharged from field duty. Clayton is planning for a Wednesday return.

Planning for a down day Wednesday.

Pickup is scheduled for the PODS and Base Trailer on Thursday (2nd).

A Happy Memorial Day Weekend to all!

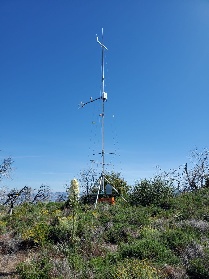

Another flawless tear down. Soil sample same as yesterday; used the tin cap and a large cylinder ring to "scoop" a sample about 1.5" below surface. Pictures sent to forest dept:

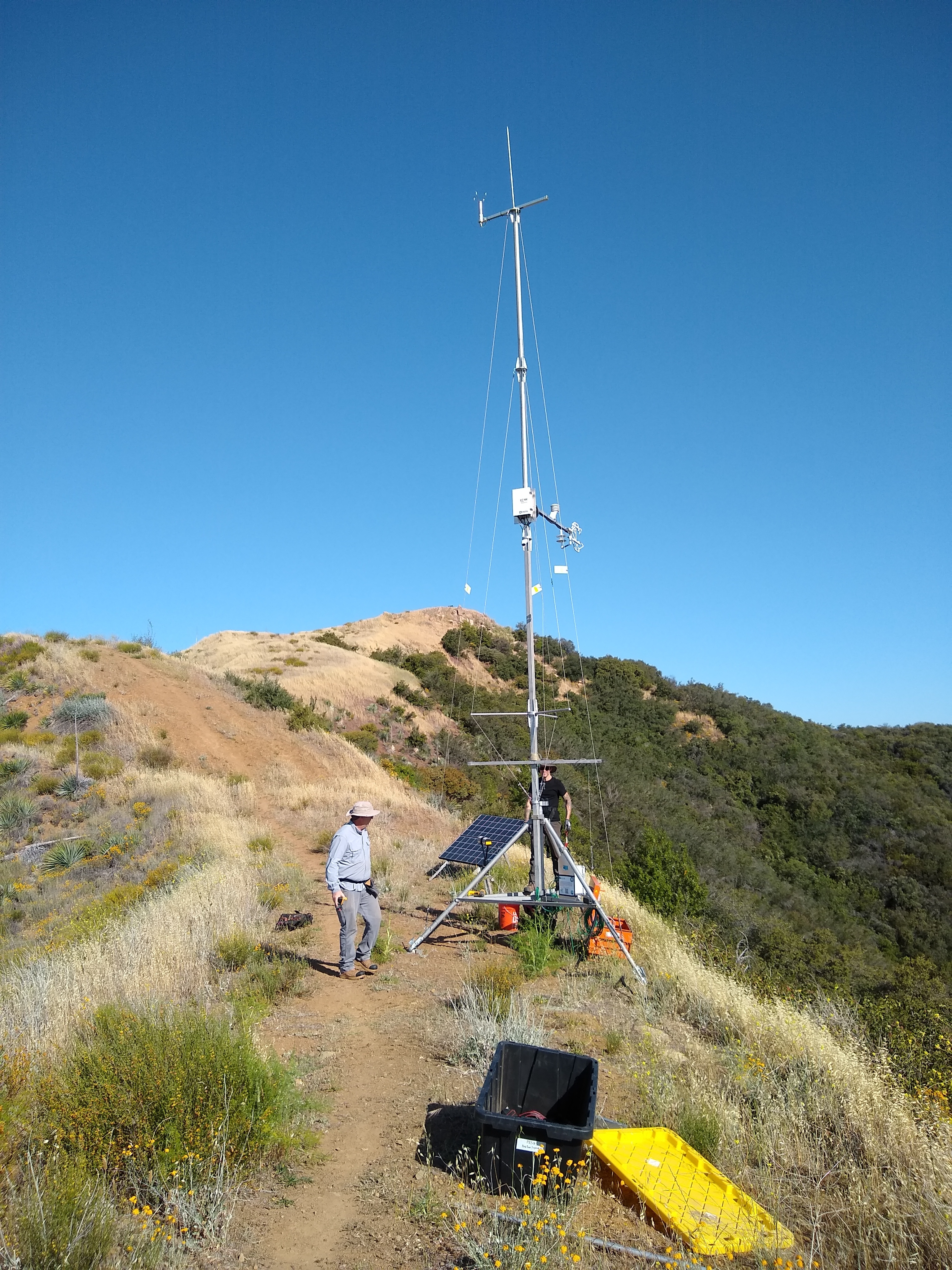

Sunny and clear after a week of beach fog:

Bee Bush and Yucca:

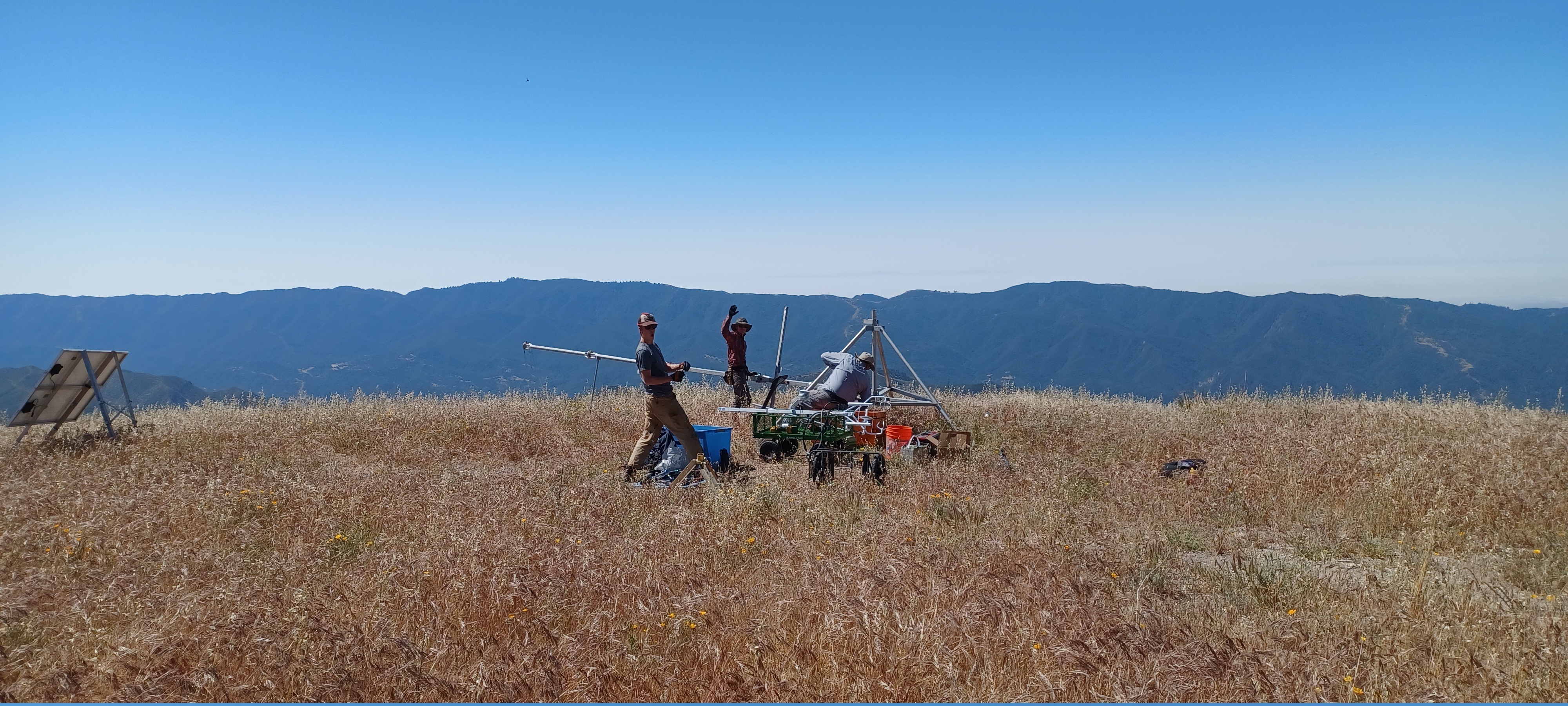

Tear Down Team Promotional Photo :

Gary estimates a new tear down record of 96min (unofficial). Impossible to use soil cylinder to collect a sample here. Large rocks would not allow a cylinder depth of more than 2". Collected soil sample by "scooping" soil into tin using a large cylinder ring and back of tin cap; rocks/gravel included. Took pictures of site for forest dept. Spent some time organizing back at base too.

This quad disk set a new SWEX weather sensor record for distance found from original mounting location at 15m

Lovely views of the Pacific:

And surrounding mountains:

Another successful tear down. Soil sample obtained and pictures sent to Forest Dept. Two sites to go. Team morale remains high.

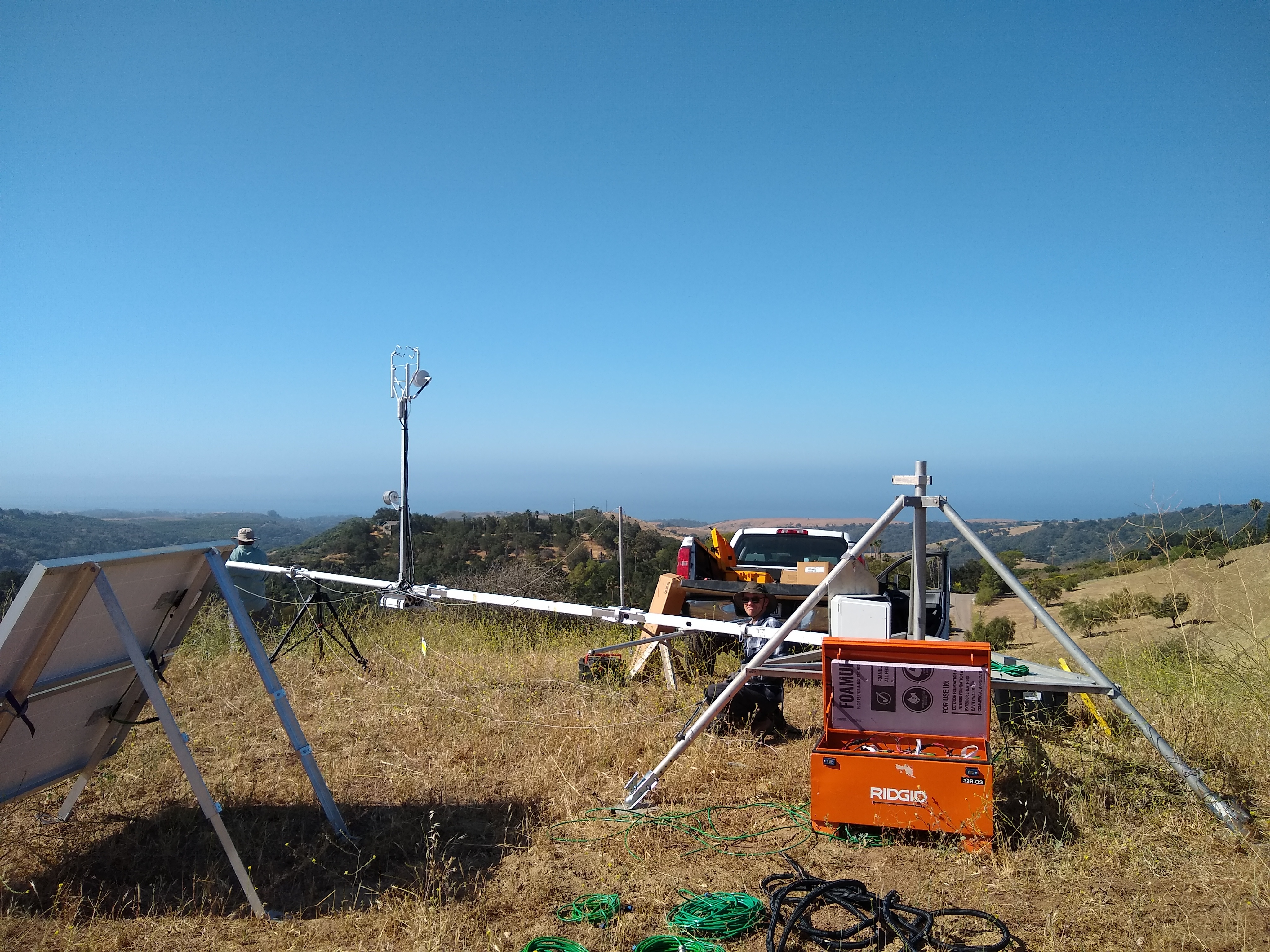

And with a shot of the team in action:

Obtained soil sample and pictures for forest service. Appreciate the crew for putting up with my off-road driving.

In the photo below, we are considering research for a future scientific paper, titled "On a metric for the difficulty of portable mesonet site access and it's correlation with scientific value."

We removed the Little Pine site today. Everything looked good at the site. Although there was a suspicious half eaten bagel laying in the grass near the solar panel. Emailed before-and-afters photos of the site to the Forest Dept.

Also mailed the satellite phones back to the rental company.

This picture is included so we can later annotate it with the SWEX sites on the far ridge, along the Camino Cielo, since we thought we could see them all.

ExxonMobil (s5)

Thanks to help from David and Cole, teardown went smoothly and quickly. Piles of bugs removed from the job box.

(this from Tony, moved from front page:)

Last week went really well and we were able to stay on schedule with 2 sites a day. Today we got extra help from David and Cole at Exxon. Remaining sites include: Cuyama, Little Pine, Figeroa, Gaviota Peak and Santa Ynez. I am taking soil samples for each of these. Our contact for the remaining sites is Veronica Garza from the forest service. She is currently out on fire assignment and has requested that I email her before and after photos for this week's sites.

Rancho Alegre Scout Camp (s13)

Pleasant day, grass was tall but not a problem. The tape around the radiometers had started peeling off.

Sedgwick Reserve (s14)

Hot. No pictures, no problems. Also retrieved the Ubiquiti radio and cable from the Sedgwick AP tower.

Reagan Ranch (s7):

In the clouds and almost chilly. This site swallowed the grounding rod and would not give it up. I took no pictures because visibility was a few hundred meters at best.

Hazard Ranch (s6):

No pictures here either, and no issues other than needing to ask slack for the code to the unexpected lock on the front gate.

Day 2

S2 - Frinj Coffee S1 - Station 18

Day 3

S18 - Windemere S9 - Camino Cielo

Yesterday was my last day participating in teardown. Gary arrived yesterday and replaces me.

We also took the 3rd round of soil samples at Windemere and Frinj Coffee sites. Tony will take over soil sampling for the rest of the sites during teardown.

Many thanks to Tony and Clayton for making my first experience with teardown enjoyable!

Montecito (s11) first.

View from Montecito during teardown. Cloudy and cool on the coast, but warm and very windy at the site.

Then La Cumbre (s10).

La Cumbre was not quite as windy. We had a nice conversation with one of the county workers who was up there, explaining the site and instruments and our field project work. We should have cards to hand out to invite people to apply to work with us.

It was Tony, Clayton and myself. We had an early start to avoid working the hot afternoon sun. Tony did a great job prepping the two trucks - we had everything we needed.

S4 - San Marcos S3 - El Capitan

The second POD arrived today and we cataloged and stored the tower equipment there. The equipment in this POD will get sent to Marshall.