10m.c (SN 1473) read high and 15m.c (SN 1390) read low during the project, so we want to know what is going on. Some input:

- The edges of the lens on 1390 were cloudy when inspected in the lab

- In the staging lab, I fired up both along with SN 1494 which had been in the lab. The h2o values were:

- 1494: 8.68 g/m^3

- 1390: 3.60 g/m^3 (5.08 lower than 1494)

- 1473: 9.21 g/m^3 (0.53 higher than 1494; 5.61 higher than 1390)

- We just did the lab zero/span test. During that:

- 1473: zero 0.71 (3.33 higher than 1390); then zeroed

- 1390: zero -2.62; then zeroed

- h2o span is still in progress

- After the zero/span, repeating the staging lab test:

- 1494: 7.98

- 1390: 9.55 (1.57 higher than 1494)

- 1473: 9.21 (1.23 higher than 1494 and 0.34 lower than 1390)

This seems to indicate that the zero/span process does actually change the output values. Now I'm even more puzzled as to why these sensors immediately had large offsets between the pre-SOS lab calibrations and the first data taken in the field.
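To double-check the differences quoted above, here is a minimal sketch that recomputes each sensor's offset relative to SN 1494 before and after the zero/span. The readings are copied from this entry; the `offsets` helper and the choice of 1494 as the reference are just for illustration, and the post-zero/span values are assumed to be in g/m^3 like the first set.

```python
# h2o readings from the staging lab, keyed by serial number.
# The "after" values are assumed to be in g/m^3 like the "before" values.
before = {"1494": 8.68, "1390": 3.60, "1473": 9.21}
after = {"1494": 7.98, "1390": 9.55, "1473": 9.21}


def offsets(readings, reference="1494"):
    """Return each sensor's reading minus the reference sensor's reading."""
    ref = readings[reference]
    return {
        sn: round(value - ref, 2)
        for sn, value in readings.items()
        if sn != reference
    }


for label, readings in (("before zero/span", before), ("after zero/span", after)):
    for sn, diff in offsets(readings).items():
        print(f"{label}: SN {sn} reads {diff:+.2f} relative to SN 1494")
```

The same helper can be pointed at a different reference sensor (for example `offsets(after, reference="1390")`) to compare the two project sensors directly.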

Doing this after the fact, so may be incomplete (but written jointly by Steve, Chris, and Tony):

Weather was fantastic: warm, partly cloudy, not too windy.

After every break, a load was brought back to the U-Haul (parked at RMBL). The two-track to Kettle Ponds was muddy but passable with the GSA trucks.

Tues: Onsite: Chris, Tony, Will, Josh, Justin, Terry, Brigitte

- Performed safety brief (Need to update the JHA form to flow with briefing)

- removed PI sensors and started removing all sensors that were reachable from ground level.

- removed all sensors from UE and UW

- removed all sonics from Center tower

- Lowered UE (in one piece)

- removed all power boxes and power cables (NOTE: BX Cable survived just fine under the snowpack)

Wed: Onsite: Chris, Tony, Will, Josh, Justin. Terry and Brigitte left at 1, replaced by Steve

- Lowered UW (in one piece, using a pulley system attached to an anchor)

Would it be possible to use a GriGri or elevated safety to lower towers?

- removed all TRHs and DSMs from Center tower

- removed all equipment from D

- removed anchors as towers were lowered

Afternoon break, then:

- Took soil core

- Lowered d tower (in one piece). Only had one person on a side guy and tower slipped that way during lowering. Should have had someone on other side guy!

- Removed soil sensors

- Dismantled c tower from 70' to 20'

- Left site about 1845 – another long day!

Thurs: Onsite: Chris, Tony, Will, Josh, Justin, Steve, Elise, her tech Asa

- removed snow pillows

- lowered the last 20' of c

- organized anchored hardware during disassembly

- cleaned up site

- Left site with last load about 1200

- Cleared out RMBL storage room and packed up the U-Haul

As part of teardown, we took a core sample yesterday, 21 June, at 3pm:

Tin: 21.21g (tin #2)

Wet: 98.24g

We set up the sample for 1–4 cm depth

We'll bring the sample back to Boulder tomorrow for the dry weighing.
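Once the dry weight is in hand, the gravimetric water content follows from the three weights. A minimal sketch, assuming the wet weight above includes the tin (the log does not say either way) and that the dry weighing will also be done in the same tin; the function name is made up for illustration:

```python
TIN_G = 21.21  # tin #2, from this entry
WET_G = 98.24  # wet weight from this entry; ASSUMED to include the tin


def gravimetric_water_content(wet_g, dry_g, tin_g):
    """Mass of water per mass of dry soil.

    wet_g and dry_g are both assumed to include the tin weight.
    """
    water = wet_g - dry_g
    dry_soil = dry_g - tin_g
    if dry_soil <= 0:
        raise ValueError("dry weight must exceed the tin weight")
    return water / dry_soil
```

After the dry weighing in Boulder, the value would come from `gravimetric_water_content(WET_G, dry_g, TIN_G)` with the measured `dry_g`.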

We couldn't figure out how to log into the "d" DSM using our phones. We did reconnect it (with a mote) and ran it for about 30min off the battery backup, so there should be a tiny bit of data on d's USB stick. If for some reason this didn't work (we couldn't monitor it), we could just use the reading from the day before when the DSM was running normally.

I am using the last few days of SOS operations to test DSM software changes. So far the only DSM that has seen an interruption is cb, but others may follow.

There was an interruption to the real-time data beginning about 30 minutes ago while NIDAS was updated on barolo. The data flow appears to be working again, but please let me know if anyone notices any problems.

The NIDAS version on barolo is now the latest version, and it includes a significant change which removes nc_server as a dependency. Instead, the NIDAS nc_server outputs are now installed with nc_server, and the DSOs are loaded from there at runtime by NIDAS.

A friendly reminder to those participating in teardown to collect the 3rd and final soil sample.

As of today, I don't see any snow inside the tower triangle on the camera. Yesterday, a bit still seemed to be present around the uw tower.

Today we worked with the RMBL crew to move all of our equipment and sensor cases out of the tent behind Crystal Cabin into the Salamander room of the Lab and out onto the patio. All of the CSAT/EC150 cases, a pallet, and the davit are covered by a tarp and under the roof. I also ended up leaving the spare pyrgeometer and pyranometer I packed in the tote with the spare radiometer fan cable/mote cables.

As of today, the road to Gothic is open. David and I were able to hitch a ride on the way out, making for a speedy trip back home.

The SOS router and everything behind it is unreachable as of about 1:10 pm local today. This shouldn't be related to the ISP down time that happened yesterday, but at the moment I have no idea what's wrong.

Adjusted Guy Wire tensions

- UE - 2 full turns of the turnbuckle

- Fwd R - 165 –> 200

- Fwd L - 180 –> 260

- Aft - 160 –> 200

- UW - 2 full turns

- Fwd R - 90 –> 200 (6 turns) - We made sure to use 2 levels after all the tensions were verified

- Fwd L - 105 –> 200

- Aft - 100 –> 240

- D - 2 full turns

- Aft L - 190 –> 295

- Aft R - 190 –> 330

- Fwd - 179 –> 300

- C - 2 turns

- Aft outer - 250 –> 340

- Aft inner - 200 –> 320

- Fwd R outer - 250 –> 300

- Fwd R inner - 160 –> 300

- Fwd L outer - 260 –> 340

- Fwd L inner - 205 –> 350
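For anyone who wants the size of each adjustment, here is a small sketch that tabulates the change per guy wire. The before/after numbers are copied from the list above in whatever units the tension gauge reports; the dictionary layout and the `tension_changes` helper are only illustrative.

```python
# (before, after) tension readings per guy wire, copied from the list above.
tensions = {
    "UE": {"Fwd R": (165, 200), "Fwd L": (180, 260), "Aft": (160, 200)},
    "UW": {"Fwd R": (90, 200), "Fwd L": (105, 200), "Aft": (100, 240)},
    "D": {"Aft L": (190, 295), "Aft R": (190, 330), "Fwd": (179, 300)},
    "C": {
        "Aft outer": (250, 340),
        "Aft inner": (200, 320),
        "Fwd R outer": (250, 300),
        "Fwd R inner": (160, 300),
        "Fwd L outer": (260, 340),
        "Fwd L inner": (205, 350),
    },
}


def tension_changes(tensions):
    """Return the increase (after minus before) for every guy wire."""
    return {
        tower: {guy: after - before for guy, (before, after) in guys.items()}
        for tower, guys in tensions.items()
    }


changes = tension_changes(tensions)
for tower, guys in changes.items():
    for guy, delta in guys.items():
        print(f"{tower} {guy}: +{delta}")
```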

Moved Booms/CSAT/EC150 from 2m to 1m

- upwind west at 11:32

- Upwind east at 11:45

- Downwind at 12:14

Swapped USB sticks

- UE

- Pi-1 at 11:56

- Pi-2 at 11:56

- D

- Pi-5 at 12:48

- Pi-6 at 12:48

Cleaned Radiometers

- UW at 11:46

- D at 11:15

Troubleshot 9m Radiometer

- Replaced mote at 1:07

Cleaned Gas Analyzer lenses on C

- 10m at 2:08

- 15m at 2:12

- 20m at 2:15

- 5m at 2:19

- 3m at 2:22

- 2m at 2:23

- 1m at 2:21

David Ortigoza and I drove into Crested Butte last night. Cottonwood pass was said to be open by multiple news sources... But unfortunately it was not. On the plus side, we got to see a herd of Big Horn Sheep before we had to turn around. We took Monarch pass into the Gunnison Valley.

We left this morning around 07:30 and were on the trail by 08:00. We were able to make it into Gothic within an hour. The road in was dry and an easy hike. We dropped off our gear and headed to the site after picking up some spare mote cables and wipes from the Salamander Room. The trail going to Kettle Ponds is pretty muddy, with one 20ft section of 11" snow. The snowmelt/runoff flowed over the trail in at least two places. The actual Kettle Ponds were filled and looked very beautiful reflecting Gothic Mountain.

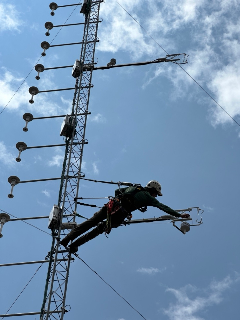

We were very busy and successful while out at the site. We stood up the barrel, checked guy tensions, moved booms/sensors, switched LiDAR USB sticks, reset UE Pi 1, troubleshot the D 9m radiometer, cleaned both sets of radiometers, cleaned gas analyzer lenses on C, and finally collected flags.

Rumor is that the road will be open tomorrow, 5/24. Also, we have not heard back from Ben nor the other caretakers to coordinate moving the sensor cases from the tent to the Salamander room. I will add another blog post giving the specifics of what the guy tensions were and what we adjusted them to, along with times the sensors were moved, replaced, or cleaned.

The ISP for the SOS site, XTREME Internet, texted a notice to Rochell that the Internet connection will be down for maintenance between 9am and 2pm on Wed, 5/24. They did not say how long the outage will last within that window, so maybe it will be a short interruption. This means all real-time data from the site will be interrupted, but everything should catch up when the connection is restored.

As of today (5/14), these snow pillows appear to be bare of snow.

Jacquie noticed that the d.9m radiometer data stopped coming in at 16:47 yesterday (5/11). This morning, the mote was reporting only its boot-up messages, and cycling through them. I think this indicates a bad sensor that pulls down the I2C line and thus creates an error in the mote's program when it starts to power the I2C lines. I became distracted, and when I tried again a few hours later, I got no response at all from this mote after several pio power cycles and even a ddn/dup. Looking at the high-rate data, I see good messages from all 4 radiometers until 15:39, and then none. This gives no clue as to which (if any) radiometer went bad to cause the reboot cycling.

I can't think of anything else to do from here. I'll add debugging/servicing of these sensors to the task list for the next site visit, which will be in a week and a half. At the very least, we will send out another V2.7 mote. If this has issues, we can systematically plug each of the radiometers in to see if one is bad. If so, we might have to leave it disconnected, since changing out a spare would be a major task. (Though, perhaps possible.)

For Will and David on the last maintenance visit:

- Debug/fix non-reporting d.9m radiometers. Take a spare V2.7 mote.

- Clean radiometers

- Change out lidar USB sticks

- Move d, ue, and uw lower sonics to 1m

- Dig out the barrel and move to the ground, if possible and/or needed.

- Barrel down as of 16:00 May 15, 2023

- Move crates and equipment out of Quonset Hut

- Check all guy wire tensions

- Clean EC150 lenses (with alcohol wipe) on 10m.c and 15m.c, and others if you feel like it.

- Power cycle Ethan's raspberry pi on UE (pi1). If not labeled, power cycle both.

- Remove flag trail markers