A warm sunny day with occasional breezy periods. The SWEX campaign is over and teardown has begun, starting at the ISS1 site. We'll be working here for 2 or 3 days, then moving on to ISS3 at Sedgwick and finishing at ISS2 at Rancho Alegre.

At ISS1, the DM computer's main ds data directory has been backed up to two USB drives, one of which also holds the Modular Profiler raw data set copied from the profiler computer. The DSM thumb drive is now in Jacquie's possession. The profiler clutter fence has been removed and is ready for pickup tomorrow, and the wind lidar is ready for a crane lift (also tomorrow).

Cole and David working on the Modular Wind Profiler and Liz and Tony taking down the met tower at ISS1.

The final day of the SWEX campaign was another warm day. There were some upper level clouds that built up during the morning but those mostly disappeared during the afternoon.

Lou and Liz went to Sedgwick to prepare for teardown, and dismantled the acoustic surrounds on the RASS. The wind lidar data transfers are going more smoothly, and Isabel reports that she managed to fill in the gaps of data that had failed to transfer. She also regenerated the cfRadial, VAD and consensus products so the lidar data on the DM should be complete.

I had a quiet day, mostly catching up on documentation. I did run another range calibration test on the Modular Profiler. The delay line and patch were on the channel 2 antenna for around 20 minutes, starting at 0028 UTC on May 16. The peak was still at 3 km, as it should be. The power drawn by the final amp remains stable, at 3.28 A on the 50 V supply and 1.77 A on the 31 V supply. The profiler, like most of the other ISS systems, has been stable and performed well throughout the campaign.
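
As a rough cross-check of those numbers, here is a minimal sketch. It assumes the standard two-way radar range relation (range = c × delay / 2) and nominal supply voltages. The actual delay of the delay line is not recorded in this log, so the code only shows what a 3 km apparent range would imply. The function names are illustrative.

```python
# Assumption: standard two-way radar relation, range = c * delay / 2.
# The delay-line length itself is not stated in the log.
SPEED_OF_LIGHT_M_S = 299_792_458.0


def two_way_delay_s(range_m: float) -> float:
    """Round-trip delay (seconds) that places an echo at range_m."""
    return 2.0 * range_m / SPEED_OF_LIGHT_M_S


def dc_power_w(volts: float, amps: float) -> float:
    """DC power drawn from a supply, P = V * I."""
    return volts * amps


# Apparent range of the calibration peak reported in the log.
delay_us = two_way_delay_s(3000.0) * 1e6

# Final-amp currents reported in the log, at nominal supply voltages.
p50 = dc_power_w(50.0, 3.28)
p31 = dc_power_w(31.0, 1.77)

print(f"Delay implied by a 3 km peak: {delay_us:.2f} microseconds")
print(f"50 V supply: {p50:.1f} W, 31 V supply: {p31:.1f} W")
```

Under these assumptions the peak corresponds to a delay of about 20 microseconds, and the final amp draws roughly 164 W and 55 W from the two supplies.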

Thank you to all involved for keeping all three ISS systems running so smoothly. Teardown will start tomorrow morning, beginning at the ISS1 site.

Another fine day in Santa Barbara. The final IOP of the campaign ended this morning with the last sounding of SWEX launched at Rancho Alegre ISS2 by Marion and Gert-Jan. Apparently it was an interesting Sundowner with a more eastern focus than previous IOPs.

We started off at the ISS1 site checking on systems, then David and I went off to Montecito Fire Station number 2 (a very nice mission style building) to pick up Helium and equipment for the UCSB folks (they had done soundings there for last night's IOP), then took that equipment back to the University. Lou went to Sedgwick to do some checks and attempted to inflate a flat tire on the trailer.

As mentioned previously, the wind lidar seems to be having problems with data transfer, so after the IOP I adjusted the scans to slow down the data rate. It is the same scanning strategy (vertical stare, PPI scan for VAD winds, and RHIs in the north-south and east-west planes), but at lower resolution and a slower sampling rate. The cycle is now 15 minutes (previously it was 10), and we're now collecting about 7-8 MB per hour instead of around 32 MB per hour. Hopefully this will allow the data transfer to catch up before we shut down on Monday.

Terry visited ISS1 in the afternoon for a tour and discussions, briefly interrupted by a roadrunner passing by the site.

David moving Helium in Montecito and Terry touring the ISS1 site

Another sunny day with a little high cirrus cloud and light winds. There was another wildfire in the foothills northwest of Goleta this afternoon. This one, in Winchester Canyon, was under control fairly quickly.

We did some cleaning up around the ISS1 site, whacking weeds with a weed eater and shears. A lot of tall mustard weed and other plants have grown up around the site and we need to clear these away from equipment prior to teardown. We took a load of Helium cylinders from the UCSB Fire HQ sounding site to the university, then another load from the SJSU site in Gaviota to the university. Lou and Liz moved on to the Rancho Alegre site and did some cleaning up there.

The wind lidar data transfers seem to be going more smoothly and seem to be keeping up with realtime. The slower scanning strategy with lower resolution in time and space appears to be helping. Isabel is running scripts to fill in some of the gaps in the earlier data and thinks we will get all of the data transferred before shutting down the lidar on Monday.

Wildfire in Goleta Hills.

A warm, sunny and breezy day. IOP 10 started this afternoon to study an eastern Sundowner event. This is a mini IOP, without the Twin Otter since all of its research hours were used up. There are also limited soundings, with just one ISS launching (Rancho Alegre) and with UCSB soundings moved from the Fire HQ to a temporary setup at a fire station in Montecito.

Lou and David started soundings from Rancho Alegre at 4pm and also did the 7pm, then the UCSB team took over for the nighttime soundings. David also helped UCSB with the move to Montecito. Jacquie went to Sedgwick to work on the ISFS 14 station since it is having communication difficulties and Lou also went to Sedgwick to check on the ISS trailer.

John and Laura left today (thank you for all your work here) and I arrived this afternoon.

The networking issue with the wind lidar at ISS1 continues. We have noticed that there have been increasing delays with the data transfer, particularly over the past few days. Only around 60% of the data is being transferred and around 20% of that is not being converted to cfRadial format suggesting that those files may be corrupted. Isabel thinks the issue may be with the database on the lidar that generates the netcdf files. It appears that generating the files is now taking longer than the sampling time so the system is not keeping up with real time. Earlier on in the campaign, the generation and file transfer process was much faster, even though the scanning and sampling strategy hasn't changed. She has written a detailed email to the Vaisala support team and they have forwarded this to the Leosphere folks in France. Tomorrow (after the IOP) I plan to slow down the data collection rate in the hopes that might allow the system to catch up. It appears that the data is okay in the lidar database, just the netcdf generation and data transfers are running very slowly. We should be able to recover all the data eventually, although we may have to wait until the system is back in Boulder to get it all off.
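
To put rough numbers on this, the sketch below combines the two percentages quoted above into an end-to-end yield, and shows how a backlog grows whenever file generation takes longer than the scan cycle. The 10-minute cycle is the one in use at this point in the campaign. The 12-minute generation time is purely a hypothetical value for illustration, since the actual generation time was not measured in this log.

```python
def usable_fraction(transferred: float, failed_conversion: float) -> float:
    """Fraction of collected data that arrives AND converts to cfRadial."""
    return transferred * (1.0 - failed_conversion)


def backlog_cycles(hours: float, cycle_min: float, generation_min: float) -> float:
    """Scan cycles still waiting for file generation after `hours`.

    Assumes one file set per scan cycle and a constant generation time,
    which is a simplification of whatever the lidar software really does.
    """
    minutes = hours * 60.0
    produced = minutes / cycle_min
    processed = minutes / generation_min
    return max(0.0, produced - processed)


# Figures quoted in the log: ~60% transferred, ~20% of that fails conversion.
yield_fraction = usable_fraction(0.60, 0.20)
print(f"End-to-end usable fraction: {yield_fraction:.0%}")

# Hypothetical: generation takes 12 min for each 10-min scan cycle.
print(f"Backlog after 24 h: {backlog_cycles(24, 10, 12):.0f} cycles")
```

With the quoted percentages, a little under half of the collected data is currently reaching the DM in usable form. Any generation time longer than the cycle time leaves a backlog that keeps growing until the data rate is reduced.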

Bright, clear day, cool, with light surface winds early but a very windy afternoon.

All ISS systems were operational throughout the day. Laura and I successfully launched soundings throughout the day and into the evening with no issues. There were some very high winds aloft, up to 64 m/s (>140 mph), that carried the balloon sondes over 145 km down range. The first two soundings of the day both went ~145 km down range, but ended up less than 5 km apart, so the winds were pretty consistent. Below are images of the first sounding of the day where you can see 60+ m/s wind barbs and the path of the balloon, ending up southeast of Bakersfield.

10:00 AM sounding track.

Internet access at ISS2 has slowed to a crawl. I initially reset the Cradlepoint and replugged the network cables looking for a loose connection. I moved the 5G modem to different locations with no improvement, and I also power cycled the network switch and rebooted the data manager, with neither resulting in any improvement. The modem says we hit 25 GB of data, so it is suspected that a data limit has been reached and our data rate has been throttled back. Gary is checking into the cell modem plan to confirm and see if things can be sped back up.

A beautiful day in Goleta - sunny and clear with a high in the low 70s. Breezy in the afternoon as well.

I met with Lou this morning, gave him a key to the green truck. All ISS systems have been working throughout the day. The issues with the ISS1 lidar still persist on Nagios, but no other errors seen on any of the systems at all today. Real-time plots appear to be updating for all three sites as well. With all systems running and no IOP today, I was able to work from the hotel today. No updates from Lou thus far today.

Laura and I wrapped our OPS shift today. Laura drove down to LA this evening while I leave tomorrow on a mid-day flight back to DIA.

A nice clear and cool day with strong winds most of the day. A line of high cirrus clouds also passed over the area in early afternoon.

Overall, a quiet day for ISS. After rebooting the ISS1 data manager last night the USB camera did not come back up. Laura and Jacquie stopped by, unplugged and re-plugged the USB cable, and the camera came back online. Otherwise, all systems have been fully operational throughout today, though the lidar at ISS1 is still showing some of the same errors as of this evening's systems check.

Tomorrow we will launch the soundings again at 1000, 1300, 1600, 1750, 1900, and 2030 local time as part of the final IOP for the project, IOP 9. Should be another long and productive day.

Low cumulus clouds this morning that cleared by noon. Sunny the rest of the day but with cool, strong winds. Slightly hazy also from dust kicked up by the winds.

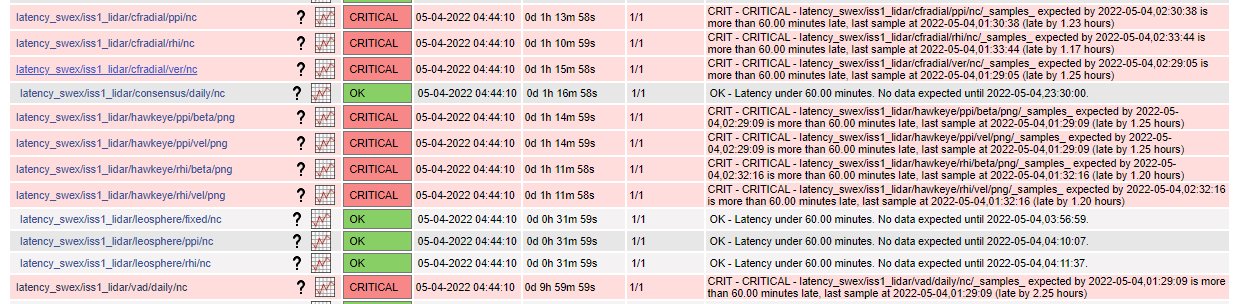

All ISS instrumentation has been operational throughout the day. There are 12 critical issues in the ISS1 Nagios concerning the lidar at ISS1, which I am assuming have to do with the data getting transferred in time and are not an instrumentation problem. Let me know if otherwise and a trip to ISS1 is required. Some of the real-time web plots are still not updating, e.g. the latest ISS2 Ceilometer plots are from 2022/05/03 and 2022/05/02, respectively.

A day full of balloon launches. I met Laura at ISS2 and launched the 10:00 am sounding. Jacquie joined us shortly thereafter and recorded Laura and me filling the balloon, connecting the spooler to the balloon, and launching the 1:00 pm sounding to add to our sounding documentation. Laura and I then joined Jacquie on a visit to the ISFS Rancho Alegre site to take a soil sample. The wind was already blowing in excess of 10-15 m/s while we were up on the ridge collecting the sample.

Jacquie and Laura after collecting the soil sample at a very windy Rancho Alegre site (looking NW).

After getting lunch in Santa Ynez, we returned to ISS2 and Jacquie left us to process the soil sample and attend to other data QC tasks. Laura and I launched the 4:00 pm, 5:30 pm, 7:00 pm, and 8:30 pm soundings without any problems. We used the shelter of the POD to shield us from the gusting and swirling winds of the valley at ISS2 while we filled the balloons. During launching, the balloons could be seen encountering some powerful wind shear, gusts, and even a couple of downdrafts as they ascended out of the valley. Once clear of the valley, the balloons and sondes caught some strong WNW winds that took them downrange at speeds greater than 40 m/s. Because of the strong winds aloft, and because the sondes went directly over the ridge east of the site, radio telemetry was lost relatively quickly, as soon as 30-40 minutes after launch. The soundings were terminated by the Vaisala software after the base station stopped receiving telemetry from the sonde for some minimum amount of time. Thus, some of the soundings concluded at pressure levels between 170 and 250 mbar. An example of how fast and far the sondes were travelling, along with the intermittent telemetry, is shown below for the 4:00 pm sounding, where the sonde travelled over 103 km in ~58 minutes (for an average ground speed of ~66 mph!).

4:00 pm sounding showing loss of signal, how far the sonde travelled in 58 minutes, and the software terminating the sounding as a result.
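
For anyone wanting to check the ground speed figure, here is a minimal sketch of the arithmetic. It uses the 103 km and 58 minute values from the 4:00 pm sounding and treats the track as a straight-line distance, which is an assumption. The function name is illustrative.

```python
KM_PER_MILE = 1.609344  # exact, by definition of the international mile


def average_ground_speed(distance_km: float, minutes: float) -> dict:
    """Average ground speed in m/s, km/h and mph over a straight-line track."""
    km_h = distance_km / (minutes / 60.0)
    return {
        "m_s": km_h / 3.6,
        "km_h": km_h,
        "mph": km_h / KM_PER_MILE,
    }


# 4:00 pm sounding: just over 103 km down range in about 58 minutes.
speed = average_ground_speed(103.0, 58.0)
print(f"{speed['m_s']:.1f} m/s, {speed['km_h']:.1f} km/h, {speed['mph']:.1f} mph")
```

This gives roughly 30 m/s, or 66 mph, averaged over the whole flight. That is consistent with peak winds aloft of more than 40 m/s, since the average includes the slower ascent through the lower levels.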

Other soundings terminated even sooner due to the sondes travelling faster, getting further down range quicker, producing low angles above the horizon to the sonde, and resulting in the loss of signal due to the ridge east of the ISS2 site.

Finally, the end of a long weekend of launching! Time for the students to take over.

Clear and sunny again all day today across the region but cooler and breezier. Conducted a Half-IOP this afternoon and evening.

At ISS3, I enabled the Best Power 610 UPS by pressing the "UPS ON Switch" for a couple of seconds, which cycled the Load Level/Runtime LEDs and then turned off the Bypass LED and turned on the Inverter LED (see image below). This put the UPS into its 'normal' operating mode.

Best Power 610 UPS front panel buttons and indicators.

To test the UPS, now in the correct configuration, I stopped the radar from running and unplugged the UPS's line power. The radar computer and electronics turned off again immediately as did the UPS, though it did momentarily illuminate the Battery LED indicating it tried to run off of batteries. When I reconnected the UPS to line power the UPS came on, but entered its "Bypass" mode, since the batteries did not work. If the batteries were able to carry the load I believe the UPS would revert back to its "Normal" mode of operation. With a fully depleted battery, it comes up in the "Bypass Mode" to recharge the batteries and will not connect the batteries to the load if line power is lost unless it is manually put into "Normal" mode by pressing the "UPS ON Switch" again for a few seconds. I retried this test with no load connected to the UPS and it again turned off almost immediately. So it appears that the batteries in the UPS are not charging up or holding any level of charge.

I then met Laura at ISS2 for an afternoon and evening of balloon sonde launches. The wind was already starting to come up when we launched the 4:00 pm sounding, and continued for the later soundings as well, yet we had no problems conducting the launches. We even filmed how we fill the balloon for Brigitte! There are some pretty good winds already with this Sundowner event, and more forecasted for tomorrow, so tomorrow should be an interesting day of launches.

Clear and sunny all day today across the region. Cooler along the coast with a high in low 70s while warmer inland with highs near 80F.

All ISS systems have been running without issues again today. Some issues with the webplots still persist, as some ceilometer and profiler consensus plots are not up to date on the ISS2 webplots page as of this writing.

Jacquie, Laura, and I all went to Sedgwick this afternoon. While Jacquie and Laura went to the ISFS tower at Sedgwick, I did some work on the UPSs in the ISS3 trailer after the failures that occurred when the power went out on Wednesday night during the last EOP. The systems in the trailer were powered as noted below:

CyberPower OR2200LCDRT2U UPS #1, black, located on the floor beneath the sounding system: Sounding computer and monitor, MW41 receiver, and cell phone booster. Output load ~118 W, as indicated by the UPS.

CyberPower OR2200LCDRT2U UPS #2, black, located on the counter between radar system and electrical panel wall: Data Manager computer and monitor, Ethernet switch, Ubiquiti POE supply, ceilometer computer and monitor, and all-sky camera power. Output load ~174 W, as indicated by the UPS.

Best Power 610 UPS, cream color, located on the floor beneath the Data Manager computer: Radar computer, monitor, radar Power Supply Unit, tower power, ceilometer power cable, network temp sensor, and USB camera(s) power. Judging by the LED status lights on the front of the unit, it appeared that the 610 batteries were not charging, as indicated below:

LED status panel of the Best Power 610 UPS

I then turned the main breaker off in the trailer at ~2142 UTC to simulate a power failure. The wind profiler electronics and computer went off immediately, which indicated that the Best Power 610 UPS is not working. The CyberPower UPS #1 also instantly turned off, which took down the sounding computer and MW41 receiver. However, CyberPower UPS #2 worked as expected and the Data Manager and Ceilometer systems remained running while the UPS indicated that the UPS could support the load for ~140 minutes.

When the power was turned back on (at ~2144 UTC) both the CyberPower UPS #1 and the Best Power 610 UPS came back on. The CyberPower UPS shows a fully charged battery, but the system repeatedly beeps 3 times while flashing the battery icon. I checked the available documentation on CyberPower's website but did not find a meaning for this combination of indicators.

With power restored, the sounding system restarted on its own and booted to the login screen while the MW41 receiver came up to standby power, meaning one must manually turn the receiver on, log in to the computer, wait for the MW41's green light to stop blinking and turn solid, then start the sounding software to get the whole system back up.

The radar electronics came back on and the profiler computer did restart on its own and booted to the login screen. I logged into the radar system under the LAPXM login and, to my surprise, the radar started running before I opened the LAPXM Console program. I opened the Task Manager and found no applications running under the LAPXM user, but upon viewing tasks for all users, I saw tasks named LAPXM_Main.exe, LAPXM_Rass.exe, and LAPXM_ManageDiskSpace.exe running under the SYSTEM user. When I started the LAPXM Console program a LAPXM_Console.exe process appeared under the LAPXM user in the Task Manager. Using the Console, I could stop and unload the radar program, but the three LAPXM_xxx processes under the SYSTEM user remained running, and also remained running after I closed the LAPXM Console program (which did end the LAPXM_Console.exe process). I then rebooted Windows on the profiler computer and logged in again with the LAPXM login. The three LAPXM_xxx processes under the SYSTEM login were again running after the restart, but the radar remained idle. It appears that the three SYSTEM processes remember the last state of the radar even after the system loses power and reboots when power returns. Good info to know. Normal radar operation was restarted at 1958 UTC.

I then turned to the CyberPower UPS #1. I tried to run the unit's self test, but the system turned off, perhaps because of the load attached. I disconnected the load and ran the self test again. This time the UPS did not turn off, but did beep once with no other indicators illuminating. Upon reattaching the load, the UPS again started to intermittently beep three times with the battery icon illuminating during the beeps. I plugged in the alarm clock as an easy load, and disconnected the line power to UPS. The UPS did not turn off but was only able to support the clock load for a minute or two before turning off. All signs point to a bad battery or some other fault in the UPS.

I then moved the loads plugged into the bad CyberPower UPS (#1) to the good CyberPower UPS (#2). The draw on the (good) UPS was now ~320 W and the UPS indicated that it could support this load for ~60 minutes. I then moved all other loads except for the radar (i.e. the tower power, ceilometer power, USB camera power, etc.) from the Best Power 610 UPS to the good CyberPower UPS (#2), which initially increased its load to ~520 W with ~40 minutes of support; however, this level came down to ~340 W with ~60 minutes of support available at this level. *** This load was further decreased to ~250 W with ~100 minutes of support when the monitors of the sounding, data manager, and ceilometer computers were turned off. So it is highly recommended that monitors not being actively used be powered off with their respective power buttons to maximize the amount of time the UPS can support the systems in the event of loss of line power.
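
To compare the load and runtime readings above on a common basis, the sketch below converts each pair reported by CyberPower UPS #2 (including the ~174 W / ~140 minute reading from the breaker test) into an implied deliverable energy in watt-hours. This is only a bookkeeping aid. The runtime figures are the UPS's own estimates, and the variable and function names are illustrative.

```python
def implied_energy_wh(load_w: float, runtime_min: float) -> float:
    """Energy (Wh) the UPS would deliver if it held load_w for runtime_min."""
    return load_w * runtime_min / 60.0


# (load in W, runtime in minutes) as displayed by CyberPower UPS #2 in this entry.
readings = [
    (174, 140),  # original load during the breaker test
    (320, 60),   # after adding the loads from UPS #1
    (520, 40),   # initial reading after adding the Best Power 610 loads
    (340, 60),   # same configuration once the load settled
    (250, 100),  # with the three monitors switched off
]

for load_w, runtime_min in readings:
    energy = implied_energy_wh(load_w, runtime_min)
    print(f"{load_w:>4} W for {runtime_min:>3} min -> ~{energy:.0f} Wh")
```

The implied energy is not constant across the readings (roughly 320 to 420 Wh), so scaling runtime linearly with load gives only a rough estimate. The practical conclusion is unchanged: switching off idle monitors noticeably extends the available runtime.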

UPDATE: This evening I looked at the manual for the Best Power 610 UPS and determined that the above status lights on the front display indicate that the UPS is in its battery charging mode. In this mode power is available at the outlets on the back of the UPS but the battery backup feature of the UPS is NOT enabled. The indicator LEDs on the front panel, pointed to by the red arrow, show the load level on the system in this mode.

Per the manual, "After completing the installation and battery recharge, switch on the UPS by pressing and holding the front panel switch I for one second. The unit will now commence a system check sequence before establishing its own inverter power. At first, all the Load Level LEDs will illuminate together, and then one by one. Within a few seconds the Inverter indicator will illuminate, indicating the inverter has now started and the bypass indicator will extinguish, indicating that the UPS is now in normal mode. You may now start the equipment connected to the UPS." Once switched into 'normal' mode, the indicator LEDs will show the amount of battery capacity remaining as a percentage of the full battery capacity. I plan to make a trip out to site 3 tomorrow before the start of the half-IOP to make sure the system is in the correct configuration and may see if the unit is indeed operational.

Overcast and cool this morning along the coastal region with skies clearing by late morning. High in the 60's with a breeze this afternoon.

All ISS systems were running throughout the day without issue. Jacquie noticed this morning that some of the web plots were not updating even though the instruments were running. Isabel and Bill were able to find and resolve the issue. Additionally, Isabel was able to resolve an issue with the radxconvert and hawkeye processes that fixed the delayed data transfer that was causing the lidar errors in the ISS1 Nagios.

Clear and sunny today inland. Warm at the ISS2 site, with a high of 88 F, though with a nice breeze developing in the afternoon.

All ISS systems were running throughout the day, however ISS3 is not responding as of 2200 PDT and there is a post from Leila in the Slack channel about a large power outage north of CA 154 that is affecting Sedgwick. I have not been able to find any information online about the outage, though I have pinged Leila on Slack for her source of information. Will work on getting more info and will likely have to head out to ISS3 first thing in the morning if systems have not come back online.

Laura launched the 1000 balloon with the help of Cliff (UCAR Enterprise Risk Management) plus Brigitte and a couple of her friends that live in Santa Barbara. I arrived just after the 10 am launch and we launched the remaining soundings at 1130, 1300, 1600, and 1900 PDT. The sounding system did not recognize the sonde prior to the 1130 launch. The sounding computer and Vaisala receiver were rebooted to resolve the issue, which made the launch a few minutes late.

There were many failing nagios checks on iss3, but I think the problem was that the catalog database was not updating. My theory is that the ceilometer PC changed the mtime property on some (many) of the camera images, rsync copied those mtime changes to the data manager, and that caused the catalog to think all those images had changed and needed to be rescanned. There are about 32,000 camera images, and it looks like 20,000 had to be rescanned.
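
The theory above can be illustrated with a small sketch. It assumes a hypothetical catalog that decides whether a file needs rescanning by comparing its size and modification time against a stored snapshot. The real catalog's change detection is not described in this log, so the `snapshot` and `changed_files` helpers are illustrative only. (rsync copies modification times when run with `-t`/`--times`, which `-a` implies; the options actually used on site are not recorded here.)

```python
import os
import tempfile
from pathlib import Path


def snapshot(directory: str) -> dict:
    """Hypothetical catalog entry per file: (size, mtime in ns)."""
    return {
        p.name: (p.stat().st_size, p.stat().st_mtime_ns)
        for p in Path(directory).iterdir()
    }


def changed_files(old: dict, new: dict) -> list:
    """Names whose signature differs, i.e. files a rescan would revisit."""
    return sorted(name for name, sig in new.items() if old.get(name) != sig)


with tempfile.TemporaryDirectory() as image_dir:
    # Five stand-in camera images with identical content.
    for i in range(5):
        Path(image_dir, f"img_{i}.jpg").write_bytes(b"same pixels")
    before = snapshot(image_dir)

    # Bump only the mtime on three files; the bytes are untouched.
    for i in range(3):
        path = Path(image_dir, f"img_{i}.jpg")
        st = path.stat()
        os.utime(path, ns=(st.st_atime_ns, st.st_mtime_ns + 1_000_000_000))

    stale = changed_files(before, snapshot(image_dir))

print(stale)  # the three touched files, although their contents never changed
```

A catalog keyed on content hashes would not be fooled this way, at the cost of reading every file on each scan. Whether that trade-off suits the ISS catalog is a separate question.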

After finally completing the rescan, subsequent rescans are now much faster again, and the only checks failing now are for the ceilometer and allsky camera. So there is still an issue with the ceilometer PC. Right now rsync connections to it are failing.

Cloudy in the morning but clear by mid-day. A very pleasant afternoon with highs in the upper 60's/lower 70's again.

Laura went with Chris and the Enterprise Risk Management person (Cliff??) to various sites today, and I believe they were joined by Terry and Brigitte who were up from Oxnard today. The WV at ISS2 was acting up again on Nagios, so I asked Laura to check the cables when they were there. All cables were seated just fine, so we power cycled the Trimble box and that seemed to work. I only power cycled the sensor when I was out there a couple of days ago. Will keep an eye on it and repeat the procedure if problem persists. I did spend some time today looking for the screw pins that hold the DB9 connector to the back of the Trimble, as they are missing currently and the cable is being held on by two zip ties. No luck at any hardware store or the local Best Buy. I will look into ordering some from Digikey if I can't find a substitute at the hardware store so as to eliminate a poor DB9 connection as a possible error source.

At ISS3, Laura found an error message on the ceilometer monitor and it appeared that the hard drive, or at least part of it, had reached capacity. See comments in this thread for details: iss3 data check and ceilometer PC problems. Cleaning out the C:\temp folder on the computer, rebooting, and restarting software has resolved the issue as of 1930 PDT this evening. Again, will monitor and repeat procedure if problem persists.
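
A simple free-space check could give earlier warning of this kind of problem. The sketch below is only a suggestion and is not something currently running on the ceilometer PC. The 90% threshold is an arbitrary assumption, and on that machine the path to check would be the drive holding `C:\temp`.

```python
import os
import shutil


def percent_used(total_bytes: int, used_bytes: int) -> float:
    """Disk usage as a percentage of total capacity."""
    return 100.0 * used_bytes / total_bytes


def needs_cleanup(total_bytes: int, used_bytes: int, threshold_pct: float = 90.0) -> bool:
    """True when usage is at or above the (assumed) warning threshold."""
    return percent_used(total_bytes, used_bytes) >= threshold_pct


# Check the drive holding the current directory; on the ceilometer PC this
# would instead be the drive that holds C:\temp.
usage = shutil.disk_usage(os.getcwd())
pct = percent_used(usage.total, usage.used)
status = "needs cleanup" if needs_cleanup(usage.total, usage.used) else "ok"
print(f"{pct:.1f}% used, {usage.free / 1e9:.1f} GB free: {status}")
```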

I stopped by ISS1 late this afternoon to check on the lidar as there were several red errors in Nagios again. The system was scanning as I approached and the tool running in Chrome on the laptop (forget its name) showed that the servers of the lidar were also running. Bill did some remote investigation and determined nothing was askew. It looks as though there is some lag in the data getting moved about, so the errors in Nagios are not critical. I will wait for guidance from Bill (or someone else) if and when these become an issue that needs attention.