Data Analysis Services Group - July 2011

News and Accomplishments

Two animations produced with VAPOR won People's Choice awards at the annual SciDAC meeting. The animations are available from:

http://www.vapor.ucar.edu/images/gallery/movies/NRELWindTurbine.mov

http://dl.dropbox.com/u/34635101/scidac2011_small.mov

More information is available from http://press.mcs.anl.gov/scidac2011/visualization-night/visualization-night-winners

VAPOR Project

Project information is available at: http://www.vapor.ucar.edu

TG GIG PY6 Award:

Yannick believes he has resolved all outstanding issues in the PIOVDC development and is now testing a variety of pathological cases (e.g., data whose boundaries are not block-aligned).

XD Vis Award:

Alan prepared materials for a tutorial on VAPOR to be given at the TACC Summer Supercomputing Institute in early August.

Kendall completed work on updating the VisIt plug-in that reads VAPOR Data Collections. John tested the plug-in on Mac and Linux platforms. The code has been documented and added to the VAPOR distribution.

Yannick continued prototype work on depth peeling.

Yannick completed dynamic shader loading integration with VAPOR. John tested the code, and added an installer for the shader programs.

Development:

Progress continues toward the 2.1 release, with all development planned for completion by the first week of August.

- Support for VDC2 output was added to the WRF and netCDF conversion utilities. vaporgui was also extended to read layered grids with VDC2 data encodings.

- The new "hedge hog" (wind barbs) visualizer was completed.

- A few niceties were added to the new 3D geometric model import capability, such as specifying scene files with relative path names. A couple of minor bugs in the memory cache were also found and fixed.

Outreach and Consulting:

Alan consulted with students at the WRF Tutorial held the week of July 9. The tutorial went well, but we had a few problems bringing the installation up to date (using VAPOR 2.0.2). Our documentation is also out of date and needs to be updated.

Arlene Laing and Sherrie Fredrick were planning to present a VAPOR demo of African weather patterns at an African weather conference in August. However, they were unable to fit it into their schedule, so we are tentatively planning to show VAPOR separately to some of the attendees on August 4.

Alan met with Mel Shapiro and worked on unsteady flow visualization of ERICA as well as several new visualizations. We made an animation combining reflectivity and cloud top temperature, available at http://vis.ucar.edu/~alan/shapiro/cttdbz.mov. Mel is particularly interested in unsteady flow visualizations such as the one at http://vis.ucar.edu/~alan/shapiro/westUnsteady.mov.

Hsiao-ming Hsu is in Germany for a month and continues to work on unsteady trajectories associated with a European pollution event. Alan provided him with three movies (at http://vis.ucar.edu/~alan/hsu) to discuss with his collaborators.

Mary Barth of ACD expressed interest in a VAPOR tutorial based on WRF-CHEM. She pointed us to a dataset, which we converted for visualization. The only difference between WRF-CHEM and WRF-ARW output is that the chemistry data has many variables, so users tend not to write all of the output to a single file.

John spent an afternoon with Dr. Yang of Kyungwon University, Korea, discussing visualization tools for climate and weather data.

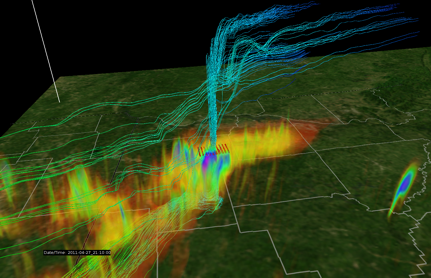

Alan worked with Wanli Wu on a simulation of the tornados that occurred in the eastern US at the end of April. His simulation is on a 300m grid and shows an actual tornado, as in the following image showing streamlines through a tornado vortex:

KISTI Proposal:

NCAR and KISTI administrators have at last come to agreement on a contract. The contract has been signed by KISTI and is now under final review by NCAR; it is expected to be executed in a matter of days. In anticipation of the award, whose first deliverables are due at the end of November this year, VAPOR staff continue preliminary efforts:

- We have been working with Frank Bryan, a formal collaborator on the proposal, to show him the ocean visualization capabilities that Karamjeet has put together and to get additional feedback. Frank noted several important points, such as using a height field other than ocean depth and avoiding the problems associated with fill_values in the data. Karamjeet made several changes to his scripts to improve the resulting visualizations.

- Frank Bryan arranged for us to present VAPOR's ocean visualization capabilities at his section meeting on July 26. Karamjeet showed the capabilities he has developed, which sparked a lot of interest and a valuable discussion. Karamjeet also presented this work in his final SIParCS presentation.

- Karamjeet has documented his Python POP2VDF scripts and made a number of changes that will be useful for converting POP data.

- John continued efforts to integrate the new Regular Grid abstract data model into vaporgui. The new model is now fully functional with the DVR and isosurface visualizers.

Admin:

John authored the TeraGrid quarterly report.

Data Analysis & Visualization Lab Projects

File System Space Management Project

- Continued work on FMU development and documentation, with accounting data gathering and reporting functionality as the first goal. Worked through a number of issues related to multiply linked files.

Security & Administration Projects

- Modified the run-time configuration of the KROLE management utilities to work around problems with some LDAP server tables not being updated consistently from People DB 2 because of workflow issues. These issues are to be addressed by another project scheduled to start in August.

- Updated the Wiki troubleshooting section on DDN controllers.

System Monitoring Project

- Rewrote the Nagios script that checks the status of GPFS and the GLADE filesystems to use native GPFS commands (mmlsmount) instead of generic tools such as df.

- Removed some unimportant service checks in Nagios (total number of processes, etc.) to reduce the number of services we monitor on each system.

- Added fan, temperature, and power checks in Nagios for the second controllers on the DDN 9550 and 9900 storage systems; previously only the first controllers were checked.

- Compiled the latest release of Nagios to see if we can upgrade without changing our configuration.
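For illustration, a minimal sketch of what an mmlsmount-based Nagios check could look like follows. It is not the script we deployed. It assumes `mmlsmount all` is on the PATH and prints lines of the form "File system NAME is mounted on N nodes."; verify that format against the installed GPFS release. The filesystem names passed on the command line are hypothetical. Exit codes follow the standard Nagios plugin convention (0 OK, 2 CRITICAL, 3 UNKNOWN).

```python
import re
import subprocess
import sys

# Standard Nagios plugin exit codes.
OK, WARNING, CRITICAL, UNKNOWN = 0, 1, 2, 3

# ASSUMED output format of `mmlsmount all`, e.g.
#   "File system glade_home is mounted on 12 nodes."
# Lines such as "File system X is not mounted." do not match and are ignored.
MOUNTED_RE = re.compile(r"^File system (\S+) is mounted on (\d+) nodes?")


def parse_mounts(text):
    """Return {filesystem: node_count} for each filesystem reported as mounted."""
    mounts = {}
    for line in text.splitlines():
        m = MOUNTED_RE.match(line.strip())
        if m:
            mounts[m.group(1)] = int(m.group(2))
    return mounts


def evaluate(mounts, expected):
    """CRITICAL if any expected filesystem is unmounted (or on zero nodes), else OK."""
    missing = [fs for fs in expected if mounts.get(fs, 0) == 0]
    if missing:
        return CRITICAL, "CRITICAL - not mounted: " + ", ".join(sorted(missing))
    return OK, "OK - all %d GPFS filesystems mounted" % len(expected)


def main(expected):
    try:
        out = subprocess.run(["mmlsmount", "all"], capture_output=True,
                             text=True, check=True).stdout
    except (OSError, subprocess.CalledProcessError) as exc:
        # Command missing or failed: Nagios should report UNKNOWN, not OK.
        print("UNKNOWN - mmlsmount failed: %s" % exc)
        return UNKNOWN
    status, message = evaluate(parse_mounts(out), expected)
    print(message)  # Nagios displays the first line of plugin output.
    return status


if __name__ == "__main__":
    sys.exit(main(sys.argv[1:]))
```

For example, running the sketch with the hypothetical arguments `glade_home glade_data02` would return CRITICAL if either filesystem were not reported as mounted. Asking GPFS directly, rather than relying on df, avoids df hanging or reporting stale data on a wedged GPFS mount.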

CISL Projects

Lustre Project

- After notification from Whamcloud, downloaded their first official release of Lustre 1.8.6 and installed it on the Lustre servers (MDS and four OSSs). Post-upgrade functionality tests were successful.

NWSC Planning

- Revised the NWSC TET spreadsheet based on FRP and submitted it.

- Prepared and gave a presentation on transition plans for GLADE.

- Prepared and revised transition documentation.

System Support

Data Analysis & Visualization Clusters

- Compiled and installed netCDF-4.1.3 on all systems using both GCC and Intel compilers.

- Worked with Junseong Heo to replace a failed drive in the DDN 9900 storage system and to convert the spare tiers into usable 8+2 tiers.

- Ordered free replacement power supplies for the Bladecenter from HP, following a recall of certain power supplies that had a very high risk of failure.

- Shepherded the CCSM group's KROLE ExtraView request to completion so the group can use a common HPSS account for file management.

- Created a directory in /glade/user for bchen.

- Created 76 new accounts for the CESM tutorial per ticket #65899.

GLADE Storage Cluster

- Created the wash1184 project space.

- The glade003 project space has been removed.

- Updated the gladequotas utility so that CSG can run it with sudo in order to check a specified user's quota on the /glade/home and /glade/user filesystems.

- Corrected DDN controller time. After noticing a persistent one-hour offset despite synchronization to a local NTP server, manually adjusted the "UTC_offset" setting so that DDN timestamps match the system clocks in DASG. Consistent time is important when troubleshooting GPFS issues, because we reconstruct the failure sequence from timestamps. All four DDN controllers now report consistent time.

- Manually rebuilt disk 30C on oasisb after its July 3 failure caused by "DISK COMMAND TIMEOUT" errors.

- Responded to an oasis6 crash with a reboot and the IB init procedure. We responded quickly enough to prevent file system errors from propagating to clients.

- Responded to a SCSI error on oasis5 caused by a read error on disk 28C (DDN 9900 lower controller), which propagated to the servers. Ran mmnsddiscover on the servers. Bluefire clients had to remount the affected /glade/data02 (containing LUN28).

- Responded to another SCSI error on the GPFS servers caused by disk errors on 21H. A check of client states showed that mirage5 was affected, and /glade/data02 was successfully remounted on it. Again, we responded quickly enough to keep the errors from propagating beyond DASG nodes, and no users noticed them.

- Replaced disk 9A on the DDN 9900; the rebuild completed by 11:05 PM.